NER = 命名实体识别

数据集:Kaggle:Annotated Corpus for Named Entity Recognition

import pandas as pd

from tensorflow import keras

import numpy as np

用Pandas

df = pd.read_csv('ner_dataset.csv',encoding='unicode-escape')

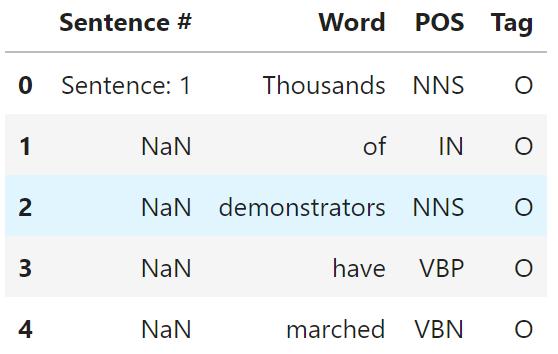

df.head()

输出:

获唯一标签,创建查找字典(标签转成类别编号)

tags = df.Tag.unique()

tags

输出:

array(['O', 'B-geo', 'B-gpe', 'B-per', 'I-geo', 'B-org', 'I-org', 'B-tim',

'B-art', 'I-art', 'I-per', 'I-gpe', 'I-tim', 'B-nat', 'B-eve',

'I-eve', 'I-nat'], dtype=object)

id2tag = dict(enumerate(tags))

tag2id = { v : k for k,v in id2tag.items() }

id2tag[0]

输出:'0'

对词表操作,创建不考虑词汇的词表(实战要用Keras向量化器,限制词汇数量)

vocab = set(df['Word'].apply(lambda x: x.lower()))

id2word = { i+1 : v for i,v in enumerate(vocab) }id2word[0] = '<UNK>'vocab.add('<UNK>')

word2id = { v : k for k,v in id2word.items() }

创还能用来训练句子的数据集,遍历原始数据集,独立句子分成X(词列表)和Y(标签列表)

X,Y = [],[]

s,t = [],[]

for i,row in df[['Sentence #','Word','Tag']].iterrows():

if pd.isna(row['Sentence #']):

s.append(row['Word'])

t.append(row['Tag'])

else:

if len(s)>0:

X.append(s)

Y.append(t)

s,t = [row['Word']],[row['Tag']]X.append(s)Y.append(t)

向量化所有单词和token:

def vectorize(seq):

return [word2id[x.lower()] for x in seq]

def tagify(seq):

return [tag2id[x] for x in seq]

Xv = list(map(vectorize,X))Yv = list(map(tagify,Y))

Xv[0], Yv[0]

输出:

([10386,

23515,

4134,

29620,

7954,

13583,

21193,

12222,

27322,

18258,

5815,

15880,

5355,

25242,

31327,

18258,

27067,

23515,

26444,

14412,

358,

26551,

5011,

30558],

[0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 2, 0, 0, 0, 0, 0])

所有句子用0填充到最大长度(实战要用别的策略,本实验只是简化版)

X_data = keras.preprocessing.sequence.pad_sequences(Xv,padding='post')

Y_data = keras.preprocessing.sequence.pad_sequences(Yv,padding='post')

定义标记分类网络(Token分类网络):

双层双向LSTM:输入(词嵌入序列),输出(时间步LSTM输出用TimeDistributed层用相同的全连接分类器)

return_sequences=True:LSTM返回时间步输出

TimeDistributed:全连接层独立处理时间步特征

代码:

maxlen = X_data.shape[1]vocab_size = len(vocab)num_tags = len(tags)model = keras.models.Sequential([

keras.layers.Embedding(vocab_size, 300, input_length=maxlen),

keras.layers.Bidirectional(keras.layers.LSTM(units=100, activation='tanh', return_sequences=True)),

keras.layers.Bidirectional(keras.layers.LSTM(units=100, activation='tanh', return_sequences=True)),

keras.layers.TimeDistributed(keras.layers.Dense(num_tags, activation='softmax'))])

model.compile(loss='sparse_categorical_crossentropy',optimizer='adam',metrics=['acc'])model.summary()

输出:

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_4 (Embedding) (None, 104, 300) 9545400

bidirectional_6 (Bidirectio (None, 104, 200) 320800

nal)

bidirectional_7 (Bidirectio (None, 104, 200) 240800

nal)

time_distributed_3 (TimeDis (None, 104, 17) 3417

tributed)

=================================================================

Total params: 10,110,417

Trainable params: 10,110,417

Non-trainable params: 0

固定序列长度:指定maxlen通义输出长度,但是不能处理现场序列(可能引入冗余填充)

变长序列处理:Masking层或动态填充(按批次处理),调整网络结构支持动态输入

model.fit(X_data,Y_data)

输出:<keras.callbacks.History at 0x16f0bb2a310>

训练结果:

sent = 'John Smith went to Paris to attend a conference in cancer development institute'

words = sent.lower().split()

v = keras.preprocessing.sequence.pad_sequences([[word2id[x] for x in words]],padding='post',maxlen=maxlen)

res = model(v)[0]

r = np.argmax(res.numpy(),axis=1)

for i,w in zip(r,words):

print(f"{w} -> {id2tag[i]}")

输出:

john -> B-per

smith -> I-per

went -> O

to -> O

paris -> B-geo

to -> O

attend -> O

a -> O

conference -> O

in -> O

cancer -> B-org

development -> I-org

institute -> I-org

扩展学习:

-

医学术语NER模型实验