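Pre-trained model: a neural network that has already been trained on a large dataset (for example, a CNN trained on ImageNet) and can therefore extract general features such as edges and textures.

Transfer learning: reuse a pre-trained model, either by fine-tuning it or by using it as a fixed feature extractor (training only the top classifier), to adapt it to a new task instead of training from scratch.

Fine-tuning: continue training only some of the pre-trained layers while the rest stay frozen.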

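Benefits of pre-training plus transfer learning:

- Saves time and data: low-level features (convolutional filters) do not have to be relearned, so a small amount of data can give good results on the new task.
- Generalizes well: the pre-trained model extracts general-purpose features that apply to many vision tasks (classification, detection).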

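Steps:

- Use a pre-trained model (ResNet, VGG) as the feature extractor.
- Freeze the convolutional layers to keep the learned features, then replace and train the top classifier.
- Optionally, fine-tune some of the convolutional layers to adapt to the characteristics of the new data.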
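The first two steps can be sketched on a toy model. This is a minimal illustration, not the VGG code used later: `backbone` is a small hypothetical stand-in for a pre-trained feature extractor, and the data is random.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for a pre-trained feature extractor (e.g. vgg.features).
backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)

# Freeze the feature extractor so its weights are not updated.
for p in backbone.parameters():
    p.requires_grad = False

# Attach a new, trainable classifier for the new two-class task.
head = nn.Linear(8, 2)
model = nn.Sequential(backbone, head)

# Hand only the parameters that still require gradients to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.01)

# One training step on random data.
x = torch.randn(4, 3, 32, 32)
y = torch.tensor([0, 1, 0, 1])
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()
optimizer.step()
```

After the backward pass only the head has gradients; the frozen backbone is left untouched.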

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
from torchinfo import summary
import numpy as np
import os

# helper functions from the course's pytorchcv.py file
from pytorchcv import train, plot_results, display_dataset, train_long, check_image_dir

Telling cats from dogs:

https://www.kaggle.com/c/dogs-vs-cats

Download the archive and extract it into the data directory:

if not os.path.exists('data/kagglecatsanddogs_5340.zip'):
    !wget -P data https://download.microsoft.com/download/3/E/1/3E1C3F21-ECDB-4869-8368-6DEBA77B919F/kagglecatsanddogs_5340.zip

import zipfile
if not os.path.exists('data/PetImages'):
    with zipfile.ZipFile('data/kagglecatsanddogs_5340.zip', 'r') as zip_ref:
        zip_ref.extractall('data')

Clean the data by checking for corrupt images:

check_image_dir('data/PetImages/Cat/*.jpg')

check_image_dir('data/PetImages/Dog/*.jpg')

Output:

Corrupt image: data/PetImages/Cat/666.jpg

Corrupt image: data/PetImages/Dog/11702.jpg
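The internals of check_image_dir are not shown in these notes. One plausible way to detect such files is to ask Pillow to open and verify each one, as sketched below. The function name `find_corrupt_images` is hypothetical, and the demo builds its own temporary files rather than touching the dataset.

```python
import glob
import os
import tempfile
from PIL import Image

def find_corrupt_images(pattern):
    """Return the paths matching `pattern` that Pillow cannot open and verify."""
    bad = []
    for path in sorted(glob.glob(pattern)):
        try:
            with Image.open(path) as im:
                im.verify()  # checks file integrity without decoding all pixels
        except Exception:
            bad.append(path)
    return bad

# Demo on a temporary directory: one valid JPEG and one file with garbage bytes.
tmp = tempfile.mkdtemp()
Image.new("RGB", (4, 4)).save(os.path.join(tmp, "good.jpg"))
with open(os.path.join(tmp, "bad.jpg"), "wb") as f:
    f.write(b"not an image")

corrupt = find_corrupt_images(os.path.join(tmp, "*.jpg"))
for path in corrupt:
    print("Corrupt image:", path)
```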

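Load the images into a PyTorch dataset, convert them to tensors, and normalize them. The std_normalize transform maps the inputs to the data distribution that the pre-trained VGG model saw during training, so that the input data matches the conditions under which the weights were learned.

Code: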

std_normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225])
trans = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    std_normalize])
dataset = torchvision.datasets.ImageFolder('data/PetImages', transform=trans)
trainset, testset = torch.utils.data.random_split(dataset, [20000, len(dataset)-20000])

display_dataset(dataset)
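The normalization step computes (x - mean) / std separately for each color channel. A small worked sketch with plain tensors, using a made-up 2x2 image whose pixels are all 0.5:

```python
import torch

# ImageNet channel statistics from the transform above (RGB order).
mean = torch.tensor([0.485, 0.456, 0.406]).view(3, 1, 1)
std = torch.tensor([0.229, 0.224, 0.225]).view(3, 1, 1)

# A fake 3x2x2 "image" with values in [0, 1], the range ToTensor() produces.
img = torch.full((3, 2, 2), 0.5)

# Per-channel standardization: mean and std broadcast over height and width.
normalized = (img - mean) / std

print(normalized[:, 0, 0])  # one value per channel
```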

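Inspect the pre-trained model:

vgg = torchvision.models.vgg16(pretrained=True)  # newer torchvision versions prefer the weights= argument
vgg.eval()  # disable dropout for inference
sample_image = dataset[0][0].unsqueeze(0)
res = vgg(sample_image)
print(res[0].argmax())

Result: tensor(282), the index of the predicted ImageNet class (not the number of classes, which is 1000)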

import json, requests

class_map = json.loads(requests.get("https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json").text)

class_map = { int(k) : v for k,v in class_map.items() }

class_map[res[0].argmax().item()]

Output:

['n02123159', 'tiger_cat']
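The network outputs one raw score (logit) per class, and argmax picks the index of the largest one. To also see how confident the prediction is, the scores can be passed through softmax. A sketch with made-up logits for five classes instead of ImageNet's 1000:

```python
import torch

# Hypothetical logits for a batch of one image over 5 classes.
logits = torch.tensor([[0.1, 2.5, -1.0, 0.3, 1.2]])

# Softmax turns the logits into probabilities that sum to 1 per image.
probs = torch.softmax(logits, dim=1)

# The three most likely classes and their probabilities.
top_prob, top_idx = probs.topk(3, dim=1)
print(top_idx, top_prob)
```

Each index in `top_idx` could then be looked up in `class_map` in the same way as the argmax result.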

Architecture of the VGG-16 network:

summary(vgg, input_size=(1, 3, 224, 224))

Output: omitted

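Dropout layer: during training it randomly switches off a fraction of the neurons (about 30% here). Its parameter is the drop rate p, typically 0.2-0.5 (lower for input layers, around 0.1; higher for fully connected layers, around 0.5); p=0.3 means 30% of the neurons are switched off.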
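A small sketch of this behaviour with nn.Dropout. In training mode roughly a fraction p of the values is zeroed and the survivors are scaled by 1/(1-p); in evaluation mode the layer passes its input through unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.3)
x = torch.ones(10000)

# Training mode: about 30% of the entries are set to zero.
drop.train()
y_train = drop(x)
zero_fraction = (y_train == 0).float().mean().item()
kept_value = y_train.max().item()  # survivors are scaled to 1 / (1 - 0.3)

# Evaluation mode: dropout is disabled.
drop.eval()
y_eval = drop(x)

print(zero_fraction, kept_value)
```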

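GPU acceleration: deep networks such as VGG-16 are computationally expensive, so a GPU helps. PyTorch uses the CPU by default; the data and the model have to be moved to the GPU explicitly.

Steps:

Check whether a GPU is available:

import torch
device = "cuda" if torch.cuda.is_available() else "cpu"

Device variable: device is either "cuda" or "cpu".

Move the data and the model:

model = model.to(device)    # move the model to the selected device
inputs = inputs.to(device)  # move the input tensors to the same device
labels = labels.to(device)

(Note: the model and the data must be on the same device. GPU memory is limited, so keep batch_size small enough to avoid out-of-memory errors.)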
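Put together, a device-agnostic training loop might look like the sketch below. The model and the batches are small hypothetical stand-ins; the point is that every batch is moved to the same device as the model before the forward pass.

```python
import torch
import torch.nn as nn

device = "cuda" if torch.cuda.is_available() else "cpu"

# Tiny stand-in model and random batches (illustration only).
model = nn.Linear(10, 2).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()
batches = [(torch.randn(8, 10), torch.randint(0, 2, (8,))) for _ in range(3)]

for inputs, labels in batches:
    # Batches are created on the CPU; move them next to the model.
    inputs, labels = inputs.to(device), labels.to(device)
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), labels)
    loss.backward()
    optimizer.step()

print("last loss:", loss.item(), "on", device)
```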

Code from the Microsoft reference:

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print('Doing computations on device = {}'.format(device))

vgg.to(device)

sample_image = sample_image.to(device)

vgg(sample_image).argmax()

Output: tensor(282, device='cuda:0')

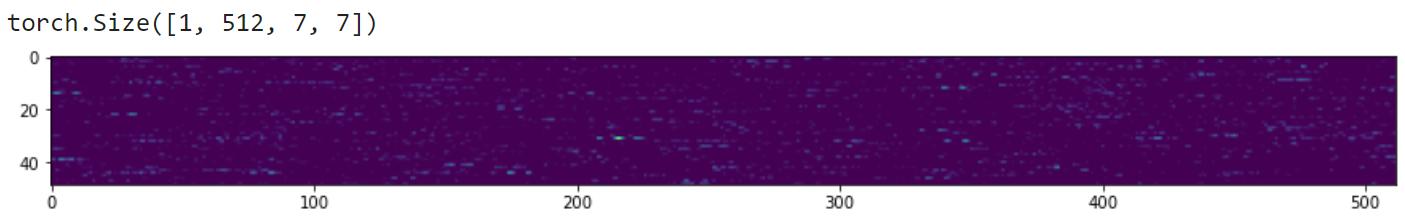

Extracting VGG features: to drop VGG-16's final classification layers, call vgg.features directly; it serves as the feature extractor.

res = vgg.features(sample_image).cpu()

plt.figure(figsize=(15,3))

plt.imshow(res.detach().view(512,-1).T)

print(res.size())
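The 7x7 spatial size of the feature map follows from the architecture: VGG-16's 3x3 convolutions use padding 1 and keep the size, while each of its five 2x2 max-pooling layers halves it, so 224 / 2^5 = 7. A sketch that applies only the pooling layers to a dummy single-channel tensor:

```python
import torch
import torch.nn as nn

# Five 2x2 max-pool layers with stride 2, as in VGG-16's feature extractor.
pools = nn.Sequential(*[nn.MaxPool2d(kernel_size=2, stride=2) for _ in range(5)])

x = torch.zeros(1, 1, 224, 224)
out = pools(x)  # 224 -> 112 -> 56 -> 28 -> 14 -> 7
print(out.shape)

# With 512 output channels, the flattened feature vector has this many values.
flat_dim = 512 * out.shape[2] * out.shape[3]
print(flat_dim)
```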

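Steps for classifying images with VGG-16 features:

- Feature dimensions: for each image the extractor outputs a 512x7x7 tensor (channels x height x width). It is reshaped to 2D (512x49 = channels x spatial positions) for plotting, and flattened into a single 25088-dimensional vector for classification.
- Data preparation: take n images (800 here) and run them through the pre-trained convolutional layers.
- Precompute the feature vectors: extract each image's features once with vgg.features (to avoid recomputing them), store all feature vectors in feature_tensor (shape 800 x 25088) and the matching labels in label_tensor (shape 800).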

bs = 8
dl = torch.utils.data.DataLoader(dataset, batch_size=bs, shuffle=True)
num = bs*100  # 800 images in total
feature_tensor = torch.zeros(num, 512*7*7).to(device)
label_tensor = torch.zeros(num).to(device)
i = 0
for x, l in dl:
    with torch.no_grad():  # no gradients are needed for feature extraction
        f = vgg.features(x.to(device))
        feature_tensor[i:i+bs] = f.view(bs, -1)  # flatten 512x7x7 to 25088
        label_tensor[i:i+bs] = l
        i += bs
        print('.', end='')
        if i >= num:
            break
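The loop above relies on every batch holding exactly bs images. A variant that collects the batches in lists and concatenates them at the end also copes with a smaller final batch. The sketch below uses a tiny hypothetical extractor and random images in place of vgg.features and the real dataset.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-ins for vgg.features and the image dataset.
extractor = nn.Sequential(nn.Conv2d(3, 4, 3, padding=1), nn.AdaptiveAvgPool2d(2))
images = torch.randn(21, 3, 16, 16)  # 21 is deliberately not a multiple of 8
labels = torch.randint(0, 2, (21,))
loader = DataLoader(TensorDataset(images, labels), batch_size=8)

features, targets = [], []
extractor.eval()
with torch.no_grad():
    for x, l in loader:
        f = extractor(x)
        features.append(f.flatten(start_dim=1))  # one row per image
        targets.append(l)

feature_tensor = torch.cat(features)
label_tensor = torch.cat(targets)
print(feature_tensor.shape, label_tensor.shape)
```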

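Define vgg_dataset, split the tensor data into training and test sets with random_split(), and train a single-layer dense classifier on top of the extracted features: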

vgg_dataset = torch.utils.data.TensorDataset(feature_tensor, label_tensor.to(torch.long))
train_ds, test_ds = torch.utils.data.random_split(vgg_dataset, [700, 100])

train_loader = torch.utils.data.DataLoader(train_ds, batch_size=32)
test_loader = torch.utils.data.DataLoader(test_ds, batch_size=32)

# dim=1 normalizes over the class dimension; omitting dim is deprecated
net = torch.nn.Sequential(torch.nn.Linear(512*7*7, 2), torch.nn.LogSoftmax(dim=1)).to(device)

history = train(net, train_loader, test_loader)
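The classifier above ends in LogSoftmax, which fits a negative log-likelihood loss. That the train helper uses one is an assumption here, since its source is not shown in these notes. Elsewhere in these notes CrossEntropyLoss is applied directly to the raw outputs of a linear layer. The two setups compute the same value, as this sketch on random logits shows:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(4, 2)            # raw scores for 4 samples, 2 classes
targets = torch.tensor([0, 1, 1, 0])

# Variant 1: LogSoftmax output fed into NLLLoss.
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss()(log_probs, targets)

# Variant 2: CrossEntropyLoss applied directly to the raw logits.
ce = nn.CrossEntropyLoss()(logits, targets)

print(nll.item(), ce.item())
```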

Output: about 98% validation accuracy at telling cats from dogs, reached with only a small fraction of the images:

Epoch 0, Train acc=0.879, Val acc=0.990, Train loss=0.110, Val loss=0.007

Epoch 1, Train acc=0.981, Val acc=0.980, Train loss=0.015, Val loss=0.021

Epoch 2, Train acc=0.999, Val acc=0.990, Train loss=0.001, Val loss=0.002

Epoch 3, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 4, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 5, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 6, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 7, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 8, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Epoch 9, Train acc=1.000, Val acc=0.980, Train loss=0.000, Val loss=0.002

Transfer learning with the VGG network:

print(vgg)

Result: omitted

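Steps for adapting the VGG-16 model:

- Model structure: the feature extractor features (convolution and pooling layers), the average pooling layer avgpool (reduces the feature maps to a fixed 512x7x7 size, i.e. 25088 values after flattening), and the original classifier (fully connected layers that output the 1000 ImageNet classes).
- Adjusting for a custom classification task: replace the original classifier with a new linear layer (nn.Linear(25088,2): 25088 inputs, 2 output classes) and freeze the feature extractor's weights so that the pre-trained layers are not disrupted early in training.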

vgg.classifier = torch.nn.Linear(25088, 2).to(device)

for x in vgg.features.parameters():
    x.requires_grad = False  # freeze the pre-trained convolutional weights

summary(vgg, (1, 3, 224, 224))

Result: omitted
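The parameter counts that summary reports can be cross-checked by hand. The helper `count_parameters` below is a hypothetical name; the demo combines one frozen convolution (the same shape as VGG-16's first layer) with the new 25088-to-2 classifier, rather than the full network.

```python
import torch.nn as nn

def count_parameters(model):
    """Return (total, trainable) parameter counts for a module."""
    total = sum(p.numel() for p in model.parameters())
    trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
    return total, trainable

# One frozen conv layer plus the new classifier head.
frozen = nn.Conv2d(3, 64, kernel_size=3, padding=1)
for p in frozen.parameters():
    p.requires_grad = False
head = nn.Linear(25088, 2)  # 25088 * 2 weights + 2 biases

model = nn.ModuleDict({"features": frozen, "classifier": head})
total, trainable = count_parameters(model)
print(total, trainable)
```

The trainable count is exactly the size of the new linear layer, which is where the figure of roughly 50 thousand trainable parameters comes from.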

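Training notes:

- Model parameters: about 15 million in total, of which about 50 thousand are trainable (the weights of the new classification layer; the rest, i.e. the feature extractor, are frozen). Freezing the pre-trained layers lowers the risk of overfitting, which suits fine-tuning on small datasets.
- train_long() prints intermediate results during training, so there is no need to wait for a full epoch to finish.
- Use a GPU; the run is very slow otherwise.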

trainset, testset = torch.utils.data.random_split(dataset,[20000,len(dataset)-20000])

train_loader = torch.utils.data.DataLoader(trainset,batch_size=16)

test_loader = torch.utils.data.DataLoader(testset,batch_size=16)

train_long(vgg,train_loader,test_loader,loss_fn=torch.nn.CrossEntropyLoss(),epochs=1,print_freq=90)

Result: omitted; the cat/dog classifier reaches roughly 95%-97% accuracy.

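Save the model:

torch.save(vgg,'data/cats_dogs.pth')

Load it again later:

# recent PyTorch versions may need weights_only=False to load a whole pickled model
vgg = torch.load('data/cats_dogs.pth')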
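An alternative that avoids pickling the whole model object is to save only the state_dict and load it into a freshly built model of the same architecture. A sketch with a small stand-in model and a temporary file (the file name is made up):

```python
import os
import tempfile
import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # stand-in for the trained network
path = os.path.join(tempfile.mkdtemp(), "model_state.pth")

# Save only the weights.
torch.save(model.state_dict(), path)

# Later: rebuild the same architecture, then load the weights into it.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path, map_location="cpu"))
restored.eval()
```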

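Fine-tuning in transfer learning:

Retrain the feature extractor by unfreezing the previously frozen convolutional parameters. It is best to keep them frozen first and train for a few epochs so that the classifier weights stabilize, and only then unfreeze them and train the network end to end; otherwise the large errors from an untrained classifier can destroy the pre-trained convolutional weights.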

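for x in vgg.features.parameters():
    x.requires_grad = True

After unfreezing, train for a few more epochs with a low learning rate (to limit the impact on the pre-trained weights). This runs slowly; once the trend is visible, the run can be stopped manually.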

train_long(vgg,train_loader,test_loader,loss_fn=torch.nn.CrossEntropyLoss(),epochs=1,print_freq=90,lr=0.0001)

Result:

Epoch 0, minibatch 0: train acc = 1.0, train loss = 0.0
Epoch 0, minibatch 90: train acc = 0.8990384615384616, train loss = 0.2978392171335744
Epoch 0, minibatch 180: train acc = 0.9060773480662984, train loss = 0.1658294214069514
Epoch 0, minibatch 270: train acc = 0.9102859778597786, train loss = 0.11819224340009514
Epoch 0, minibatch 360: train acc = 0.9191481994459834, train loss = 0.09244130522920814
Epoch 0, minibatch 450: train acc = 0.9261363636363636, train loss = 0.07583886292451236
Epoch 0, minibatch 540: train acc = 0.928373382624769, train loss = 0.06537413817456822
Epoch 0, minibatch 630: train acc = 0.9318541996830428, train loss = 0.057419379426257924
Epoch 0, minibatch 720: train acc = 0.9361130374479889, train loss = 0.05114534460059813
Epoch 0, minibatch 810: train acc = 0.938347718865598, train loss = 0.04657612246737968
Epoch 0, minibatch 900: train acc = 0.9407602663706992, train loss = 0.04258851655712403
Epoch 0, minibatch 990: train acc = 0.9431130171543896, train loss = 0.03927870595491257
Epoch 0, minibatch 1080: train acc = 0.945536540240518, train loss = 0.03652716609309053
Epoch 0, minibatch 1170: train acc = 0.9463065755764304, train loss = 0.03445258006186286
Epoch 0 done, validation acc = 0.974389755902361, validation loss = 0.005457923144233279
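The two-phase schedule (train the classifier with frozen features, then unfreeze everything and continue at a low learning rate) can be sketched on a toy model. All names and data below are hypothetical stand-ins. One detail worth noting: an optimizer built over only the classifier's parameters will not update the unfrozen layers, so this sketch creates a new optimizer for the second phase.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
features = nn.Sequential(nn.Linear(6, 4), nn.ReLU())  # stand-in for pre-trained layers
classifier = nn.Linear(4, 2)
model = nn.Sequential(features, classifier)
x, y = torch.randn(16, 6), torch.randint(0, 2, (16,))
loss_fn = nn.CrossEntropyLoss()

def run_steps(optimizer, steps=5):
    for _ in range(steps):
        optimizer.zero_grad()
        loss_fn(model(x), y).backward()
        optimizer.step()

# Phase 1: features frozen, only the classifier is trained.
for p in features.parameters():
    p.requires_grad = False
before = features[0].weight.detach().clone()
run_steps(torch.optim.SGD(classifier.parameters(), lr=0.1))
unchanged_in_phase1 = torch.equal(before, features[0].weight)

# Phase 2: unfreeze and train everything with a much lower learning rate.
for p in features.parameters():
    p.requires_grad = True
run_steps(torch.optim.SGD(model.parameters(), lr=0.0001))
changed_in_phase2 = not torch.equal(before, features[0].weight)

print(unchanged_in_phase1, changed_in_phase2)
```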

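Besides VGG-16, the torchvision package provides other pre-trained computer vision networks. The most widely used is the ResNet family (ResNet-18 is a good starting point; ResNet-152 is the deepest variant).

Using ResNet-18: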

resnet = torchvision.models.resnet18()

print(resnet)

Process: omitted

Batch normalization: omitted
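As a brief illustration of what the batch normalization layers in ResNet do: in training mode, nn.BatchNorm2d rescales each channel of a batch to roughly zero mean and unit standard deviation before applying its learnable scale and shift, which start at 1 and 0. A sketch on random data:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm2d(3)

# A batch whose channels have mean around 10 and standard deviation around 5.
x = torch.randn(16, 3, 8, 8) * 5 + 10

bn.train()
y = bn(x)

# Per-channel statistics over the batch and spatial dimensions.
channel_mean = y.mean(dim=(0, 2, 3))
channel_std = y.std(dim=(0, 2, 3), unbiased=False)
print(channel_mean, channel_std)
```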