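Dropout definition:

A regularization technique that keeps neural networks from overfitting. With dropout rate p, it works as follows:

- During training, a random subset of neurons is switched off (their outputs are temporarily set to zero), so the model cannot rely too heavily on any single neuron, which improves generalization.

- At test time, all neurons stay active and the weights are scaled (multiplied by the keep probability 1-p), so the expected output stays stable.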
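The two phases described above can be checked with a small sketch. It uses only the Python standard library, and the helper names (`dropout_train`, `dropout_test`, `inverted_dropout_train`) are made up for illustration; they are not Keras functions. The third helper shows the "inverted" variant, which is what the Keras `Dropout` layer does: kept activations are scaled by 1/(1-p) during training and nothing is changed at inference. Both variants aim to keep the expected activation the same in training and testing.

```python
import random

def dropout_train(x, p, rng):
    """Classic dropout, training pass: zero each activation with probability p."""
    return [0.0 if rng.random() < p else v for v in x]

def dropout_test(x, p):
    """Classic dropout, test pass: keep every unit, scale by the keep probability 1 - p."""
    return [v * (1.0 - p) for v in x]

def inverted_dropout_train(x, p, rng):
    """Inverted dropout, training pass: drop with probability p and
    scale the survivors by 1 / (1 - p). Requires p < 1."""
    keep = 1.0 - p
    return [0.0 if rng.random() < p else v / keep for v in x]

def mean(x):
    return sum(x) / len(x)

rng = random.Random(0)   # fixed seed so the run is repeatable
p = 0.5                  # dropout rate
x = [1.0] * 100_000      # dummy activations, all equal to 1

train_mean = mean(dropout_train(x, p, rng))              # close to 1 - p
test_mean = mean(dropout_test(x, p))                     # exactly 1 - p
inverted_mean = mean(inverted_dropout_train(x, p, rng))  # close to the original mean, 1.0

print(train_mean, test_mean, inverted_mean)
```

With classic dropout the training-time mean and the scaled test-time mean agree (both near 1-p), while inverted dropout keeps the training-time mean near the unscaled value, so no correction is needed at test time.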

The experiment uses the MNIST dataset with a convolutional neural network.

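from tensorflow import keras
import numpy as np
import matplotlib.pyplot as plt

# Load MNIST: 28x28 grayscale digit images with integer labels 0-9
(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

# Scale pixel values from [0, 255] to [0, 1]
x_train = x_train.astype("float32") / 255
x_test = x_test.astype("float32") / 255

# Add a trailing channel axis: (N, 28, 28) -> (N, 28, 28, 1), as Conv2D expects
x_train = np.expand_dims(x_train, -1)
x_test = np.expand_dims(x_test, -1)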

Define the train function:

def train(d):
    """Train a small CNN with dropout rate d and return the Keras History."""
    print(f"Training with dropout = {d}")
    model = keras.Sequential([
        keras.layers.Conv2D(32, kernel_size=(3, 3), activation="relu", input_shape=(28, 28, 1)),
        keras.layers.MaxPooling2D(pool_size=(2, 2)),
        keras.layers.Conv2D(64, kernel_size=(3, 3), activation="relu"),
        keras.layers.MaxPooling2D(pool_size=(2, 2)),
        keras.layers.Flatten(),
        keras.layers.Dropout(d),  # the only layer that varies between runs
        keras.layers.Dense(10, activation="softmax"),
    ])
    # Labels are integer class ids, hence the sparse loss; 'acc' names the history keys
    model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['acc'])
    # The test set doubles as validation data here
    hist = model.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=5, batch_size=64)
    return hist

# Train one model per dropout rate
res = {d: train(d) for d in [0, 0.2, 0.5, 0.8]}

Training output: omitted

Plot:

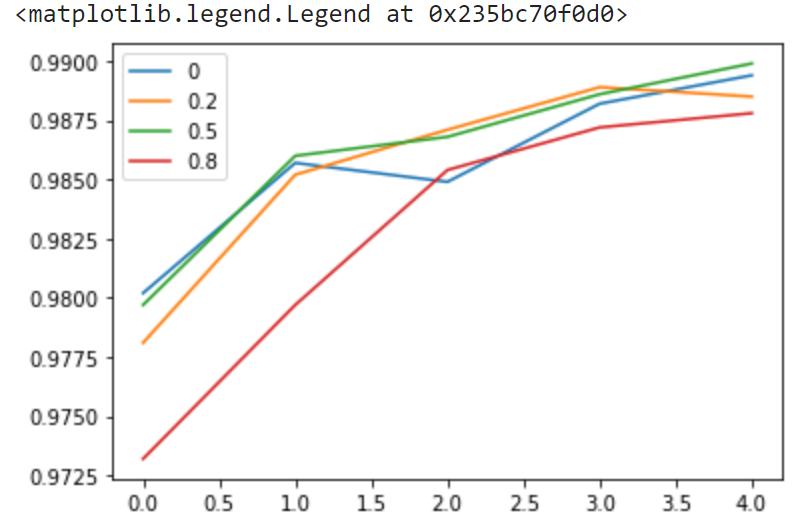

# One validation-accuracy curve per dropout rate
for d, h in res.items():
    plt.plot(h.history['val_acc'], label=str(d))
plt.legend()
plt.show()

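Conclusions:

- Best dropout range: 0.2-0.5. In this 5-epoch experiment these runs trained fastest and gave the best results.

- No dropout (d=0): training was unstable and slow.

- High dropout (d=0.8): results were poor.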
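The conclusions above are read off the plot. They could also be checked numerically with a helper along these lines. This is only a sketch: `summarize` is a hypothetical name, and it assumes each history is a dict with the keys `acc` and `val_acc`, which is what `metrics=['acc']` in the compile call produces.

```python
def summarize(histories):
    """Summarize per-rate training histories.

    histories maps a dropout rate to a dict with the keys 'acc' and 'val_acc',
    each a list with one value per epoch (assumed layout, see the note above).
    Returns, per rate: the best validation accuracy, the 1-indexed epoch at
    which it occurred, and the final train-minus-validation accuracy gap.
    """
    rows = {}
    for rate, hist in histories.items():
        val = hist["val_acc"]
        # Index of the epoch with the highest validation accuracy
        best = max(range(len(val)), key=val.__getitem__)
        rows[rate] = {
            "best_val_acc": val[best],
            "best_epoch": best + 1,
            "final_gap": hist["acc"][-1] - val[-1],
        }
    return rows
```

With the `res` dictionary from the training step, it would be called as `summarize({d: h.history for d, h in res.items()})`. Note that Keras computes training accuracy with dropout active, so `final_gap` can be negative for larger rates; a large positive gap is the usual sign of overfitting.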