Visualizing the network's idea of an ideal cat

Load the VGG network:

import tensorflow as tf

from tensorflow import keras

import matplotlib.pyplot as plt

import numpy as np

from IPython.display import clear_output

from PIL import Image

import json

np.set_printoptions(precision=3,suppress=True)

model = keras.applications.VGG16(weights='imagenet',include_top=True)

classes = json.loads(open('imagenet_classes.json','r').read())

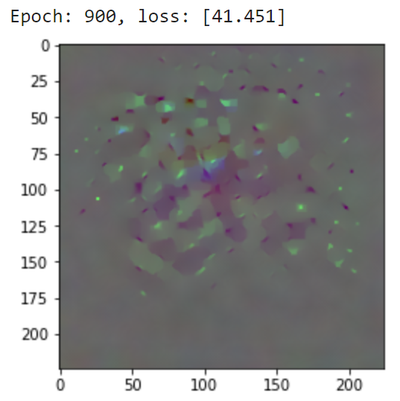

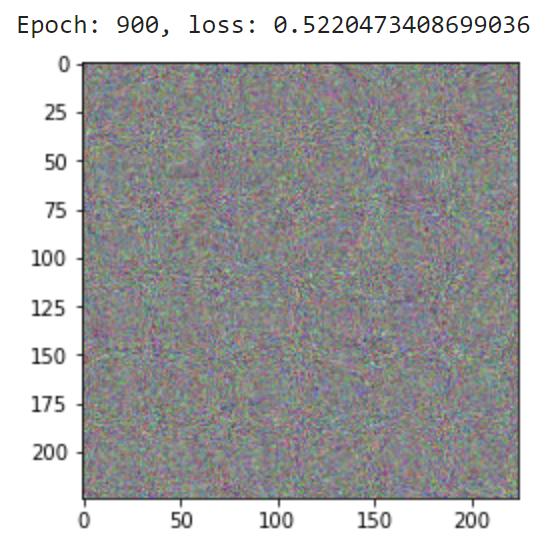

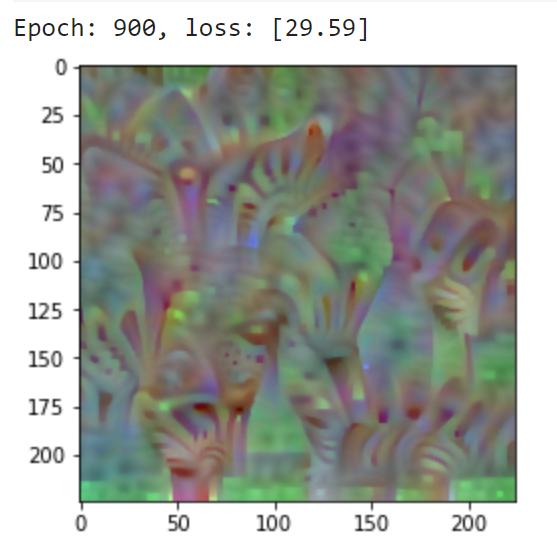

Optimizing the result: use gradient descent to optimize an image until the network sees it as a cat

Plot:

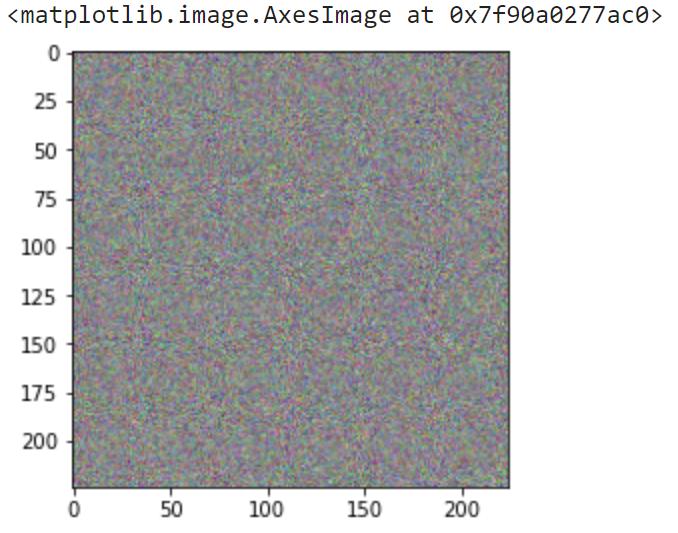

x = tf.Variable(tf.random.normal((1,224,224,3)))

def normalize(img):
    # Min-max rescale so the image can be displayed with imshow
    return (img-tf.reduce_min(img))/(tf.reduce_max(img)-tf.reduce_min(img))

plt.imshow(normalize(x[0]))

Result:

normalize() rescales the pixel values into the range 0-1.
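As a quick check of what this rescaling does, here is a minimal NumPy sketch of the same min-max formula. The name `normalize_np` and the sample values are made up for illustration and are not part of the notebook.

```python
import numpy as np

def normalize_np(img):
    """Min-max rescale an array to [0, 1], using the same formula as normalize()."""
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo)

# Hypothetical sample values standing in for random-normal pixels
sample = np.array([-2.0, 0.0, 1.0, 2.0])
scaled = normalize_np(sample)
print(scaled)  # the values 0, 0.5, 0.75 and 1
```

Note that the formula divides by zero for a constant image, so a small epsilon in the denominator may be worth adding if that case can occur.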

Code:

def plot_result(x):
    # Run the network and take the most probable class
    res = model(x)[0]
    cls = tf.argmax(res)
    print(f"Predicted class: {cls} ({classes[cls]})")
    print(f"Probability of predicted class = {res[cls]}")
    # Left: the image; right: bar chart of all 1000 class probabilities
    fig,ax = plt.subplots(1,2,figsize=(15,2.5),gridspec_kw = { "width_ratios" : [1,5]} )
    ax[0].imshow(normalize(x[0]))
    ax[0].axis('off')
    ax[1].bar(range(1000),res,width=3)
    plt.show()

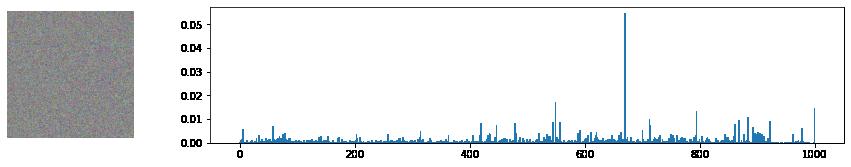

plot_result(x)

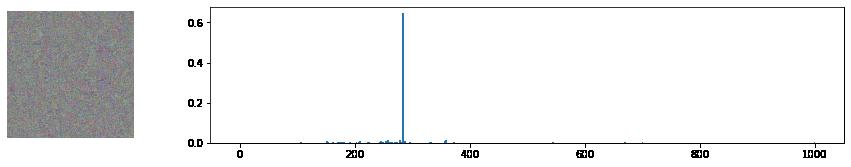

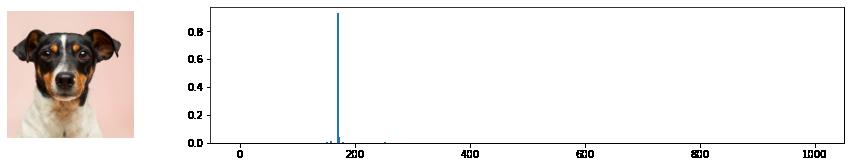

Result:

Predicted class: 669 (mosquito net)

Probability of predicted class = 0.05466596782207489

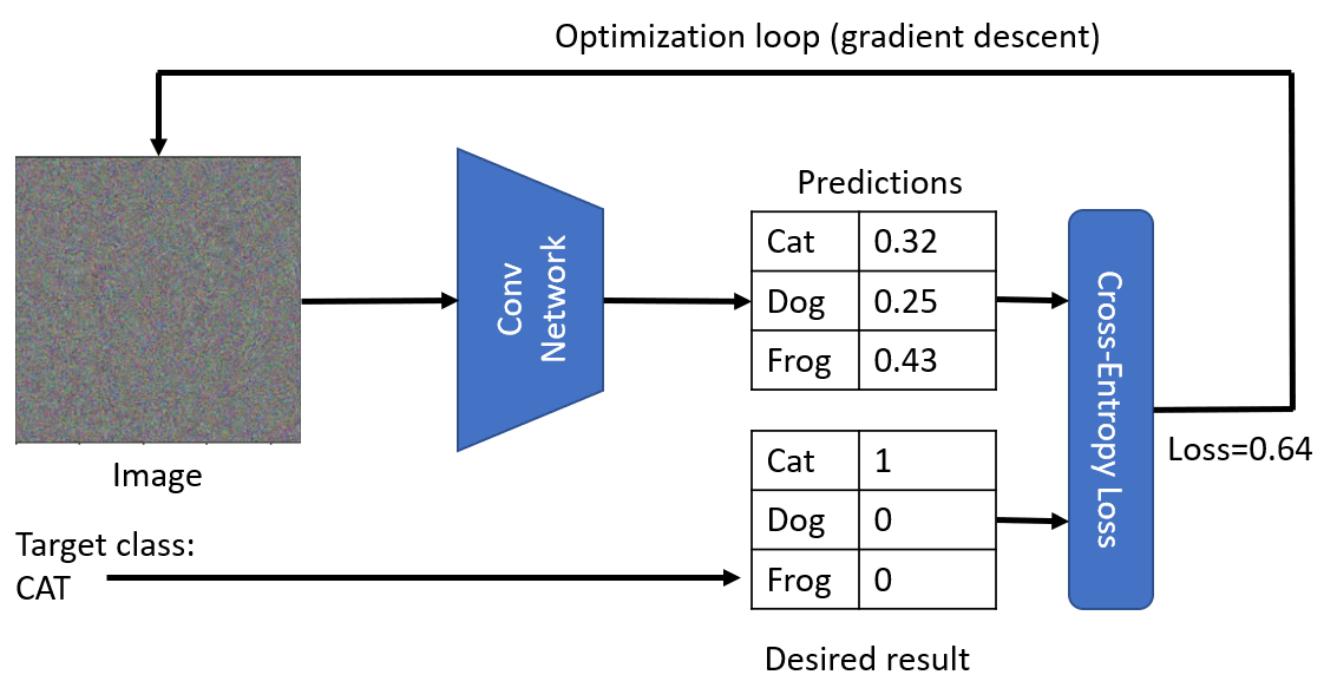

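Steps for adjusting an image's class probability with VGG:

- Choose a target class, then adjust the image x by gradient descent so that the probability the network V predicts for that class is maximized.
- Use the cross-entropy loss L = L(c, V(x)), where c is the index of the target class.
- Gradient descent update: apply the formula x ← x − η·∂L/∂x, where η is the learning rate (this is the x.assign_sub(eta*grads) step in the code).
- Iterate: repeat the update for a number of epochs, observing how the image changes along the way.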
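The steps above can be sketched on a toy problem without loading VGG. This is only an illustration under stated assumptions: a made-up random linear layer `W` with softmax stands in for the network V, the "image" is a flat vector of 16 values, and the names `predict` and `cross_entropy` are hypothetical. The gradient is written out by hand instead of using `tf.GradientTape`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the network V: a fixed linear layer plus softmax
n_pixels, n_classes = 16, 5
W = rng.normal(size=(n_classes, n_pixels))

def predict(x):
    """Return class probabilities V(x) for a flattened 'image' x."""
    logits = W @ x
    logits = logits - logits.max()      # subtract the max for numerical stability
    e = np.exp(logits)
    return e / e.sum()

def cross_entropy(c, p):
    """Cross-entropy loss L(c, V(x)) for target class index c."""
    return -np.log(p[c])

c = 3                                   # step 1: choose the target class
eta = 0.02                              # learning rate
x = rng.normal(size=n_pixels)           # start from random noise
p_start = predict(x)[c]

for epoch in range(300):                # step 4: repeat the update
    p = predict(x)                      # step 2: forward pass (the loss is -log p[c])
    onehot = np.eye(n_classes)[c]
    grad = W.T @ (p - onehot)           # dL/dx for softmax followed by cross-entropy
    x = x - eta * grad                  # step 3: x <- x - eta * dL/dx

p_end = predict(x)[c]
print(f"p(target) before: {p_start:.3f}, after: {p_end:.3f}")
print(f"final loss: {cross_entropy(c, predict(x)):.4f}")
```

Only the input x is updated; the weights W stay fixed, which is the same division of roles as between the image and the pretrained VGG weights.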

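Although one bar stands out in the plot above, its actual probability may be only about 5% (check the axis range to read off the probability). The computation benefits from GPU acceleration; if resources are limited, reduce the number of epochs.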

target = [284] # Siamese cat

def cross_entropy_loss(target,res):
    # Mean cross-entropy between the target class index and the predicted probabilities
    return tf.reduce_mean(keras.metrics.sparse_categorical_crossentropy(target,res))

def optimize(x,target,epochs=1000,show_every=None,loss_fn=cross_entropy_loss, eta=1.0):

if show_every is None:

show_every = epochs // 10

for i in range(epochs):

with tf.GradientTape() as t:

res = model(x)

loss = loss_fn(target,res)

grads = t.gradient(loss,x)

x.assign_sub(eta*grads)

if i%show_every == 0:

clear_output(wait=True)

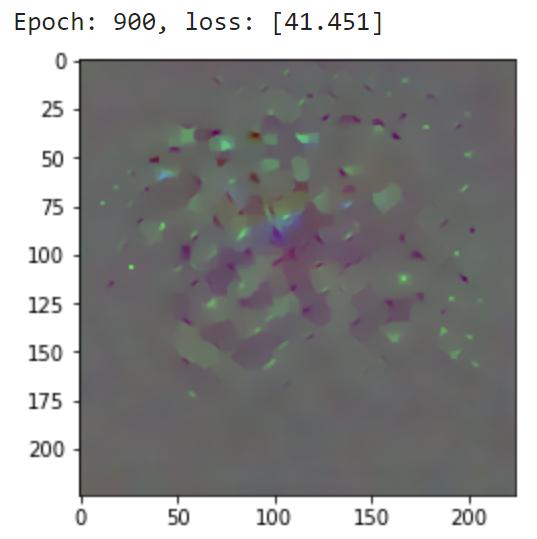

print(f"Epoch: {i}, loss: {loss}")

plt.imshow(normalize(x[0]))

plt.show()

optimize(x,target)

绘图:

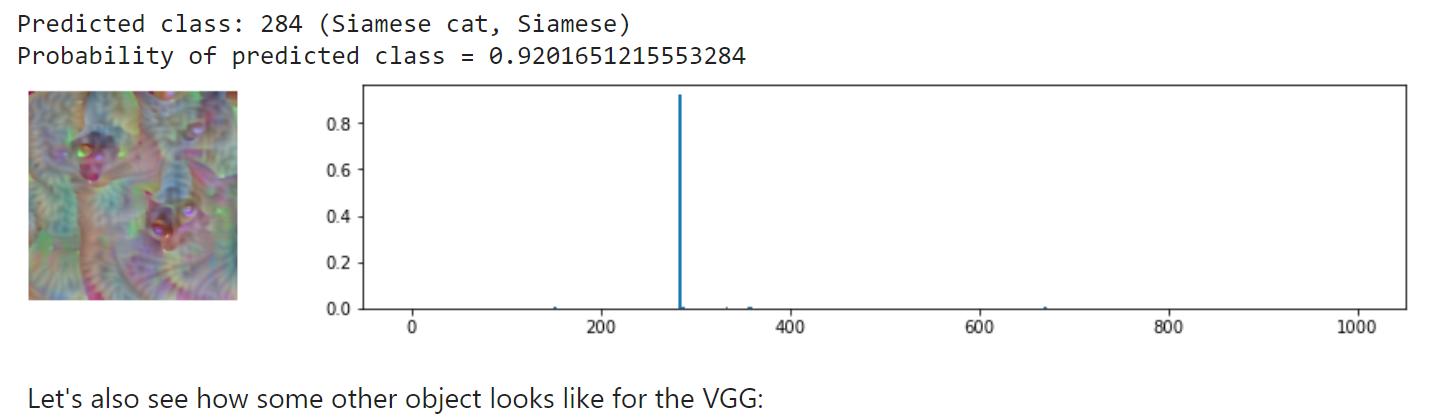

Predicted class: 284 (Siamese cat, Siamese) Probability of predicted class = 0.6449142098426819

plot_result(x)

def total_loss(target,res):
    # Note: the total-variation term reads the global image variable x
    return 10*tf.reduce_mean(keras.metrics.sparse_categorical_crossentropy(target,res)) + \
           0.005*tf.image.total_variation(x)

optimize(x,target,loss_fn=total_loss)

The image produced with cross-entropy loss alone is noisy and needs denoising

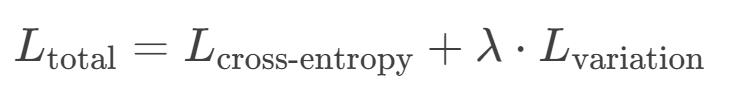

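To reduce the noise, add a variation loss (total variation), which measures the differences between neighbouring pixels.

Formula (the two terms are weighted by a multiplier λ): total loss = cross-entropy loss (classification objective) + λ · variation loss (smoothness constraint)

Steps: compute the cross-entropy loss, compute the variation loss, then optimize both jointly (adjust the image so that the total loss is minimized).

Observations on the balancing coefficient λ: if it is too small, the noise remains visible; if it is too large, the image becomes blurry and the class probability drops. In practice, an initial value of 0.1-1.0 is a reasonable starting point, followed by fine-tuning, using the visualized result to judge the best balance. The right value also depends on how the two terms are scaled; total_loss here weights them 10 and 0.005.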
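To make the variation term concrete, here is a small NumPy sketch of a sum-of-absolute-differences total variation and of the combined loss. The names `total_variation` and `total_loss_sketch`, the 8x8 test images, and the cross-entropy value of 1.0 are assumptions for illustration. As far as I know this is the same definition `tf.image.total_variation` uses, but treat that as an assumption to verify.

```python
import numpy as np

def total_variation(img):
    """Sum of absolute differences between neighbouring pixels of an H x W x C array."""
    dh = np.abs(img[1:, :, :] - img[:-1, :, :]).sum()   # vertical neighbours
    dw = np.abs(img[:, 1:, :] - img[:, :-1, :]).sum()   # horizontal neighbours
    return dh + dw

def total_loss_sketch(ce_loss, img, lam):
    """Total loss = cross-entropy term + lambda * variation term."""
    return ce_loss + lam * total_variation(img)

rng = np.random.default_rng(0)
noisy = rng.normal(size=(8, 8, 3))      # random noise: neighbouring pixels differ a lot
smooth = np.full((8, 8, 3), 0.5)        # constant image: neighbouring pixels are identical

tv_noisy = total_variation(noisy)
tv_smooth = total_variation(smooth)
print(f"TV noisy: {tv_noisy:.2f}, TV smooth: {tv_smooth:.2f}")

# With the same hypothetical cross-entropy value, the noisy image is penalized more
ce = 1.0
print(total_loss_sketch(ce, noisy, lam=0.1), total_loss_sketch(ce, smooth, lam=0.1))
```

Because the variation term is a sum over all pixel pairs, it grows with image size, which is one reason a suitable λ can differ a lot between setups.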

Plot:

Trying a different object: a zebra

x = tf.Variable(tf.random.normal((1,224,224,3)))

optimize(x,[340],loss_fn=total_loss) # zebra

Adversarial attack:

img = Image.open('images/dog-from-unsplash.jpg')

img = img.crop((200,20,600,420)).resize((224,224))

img = np.array(img)

plt.imshow(img)

Plot:

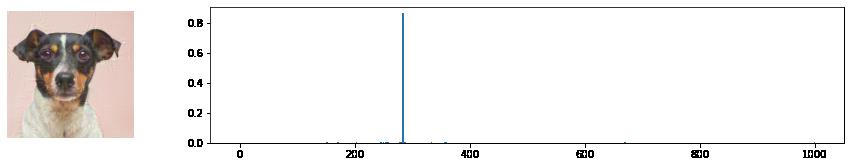

plot_result(np.expand_dims(img,axis=0))

Optimize the dog into a cat:

x = tf.Variable(np.expand_dims(img,axis=0).astype(np.float32)/255.0)

optimize(x,target,epochs=100)

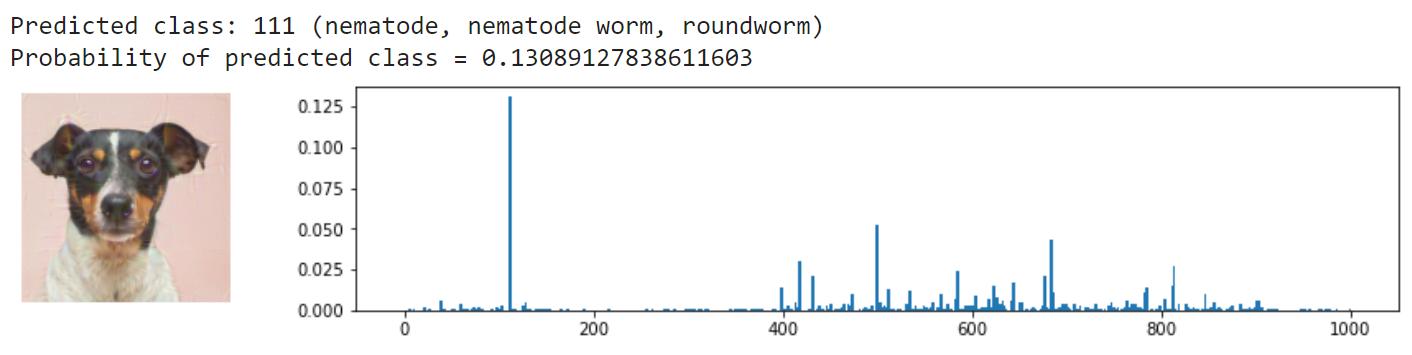

Using ResNet: model is a global variable, so it can simply be reassigned to ResNet in place of VGG

model = keras.applications.ResNet50(weights='imagenet',include_top=True)

Plot:

plot_result(x)

x = tf.Variable(tf.random.normal((1,224,224,3)))

optimize(x,target=[340],epochs=500,loss_fn=total_loss)

Output: Epoch: 450, loss: [46.166]

Plot:

plot_result(x)

Predicted class: 340 (zebra)

Probability of predicted class = 0.8876020312309265

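Using a different optimizer: Keras has many built-in optimizers that can take the place of plain gradient descent. Replace x.assign_sub(eta*grads) with optimizer.apply_gradients()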

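def optimize(x,target,epochs=1000,show_every=None,loss_fn=cross_entropy_loss,optimizer=None):
    # Create the optimizer per call so its state is not shared between runs
    if optimizer is None:
        optimizer = keras.optimizers.SGD(learning_rate=1)
    if show_every is None:
        show_every = max(1, epochs // 10)  # avoid a zero interval when epochs < 10
    for i in range(epochs):
        with tf.GradientTape() as t:
            res = model(x)
            loss = loss_fn(target,res)
        grads = t.gradient(loss,x)
        # The optimizer applies the update instead of x.assign_sub(eta*grads)
        optimizer.apply_gradients([(grads,x)])
        if i%show_every == 0:
            clear_output(wait=True)
            print(f"Epoch: {i}, loss: {loss}")
            plt.imshow(normalize(x[0]))
            plt.show()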

x = tf.Variable(tf.random.normal((1,224,224,3)))

optimize(x,[898],loss_fn=total_loss) # water bottle

Further reading:

- Dropout experiments
- Using TensorFlow for transfer learning
- Using PyTorch for transfer learning
- Classifying real photos of pets