Convolutional neural networks: finding specific objects in images

Setup:

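    import torch
    import torch.nn as nn
    import torchvision
    import matplotlib.pyplot as plt
    from torchinfo import summary
    import numpy as np

    # pytorchcv is assumed to be the local helper module that accompanies these notes
    from pytorchcv import load_mnist, train, plot_results, plot_convolution, display_dataset

    # Load MNIST; the later code relies on the train_loader / test_loader this call is expected to define
    load_mnist(batch_size=128)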

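Convolutional filter: a small window that slides over every pixel of the image and computes a weighted average of the neighboring pixels, which extracts patterns of the object; the filter is defined by a matrix of weight coefficients.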

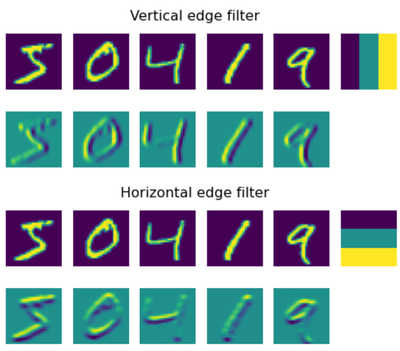

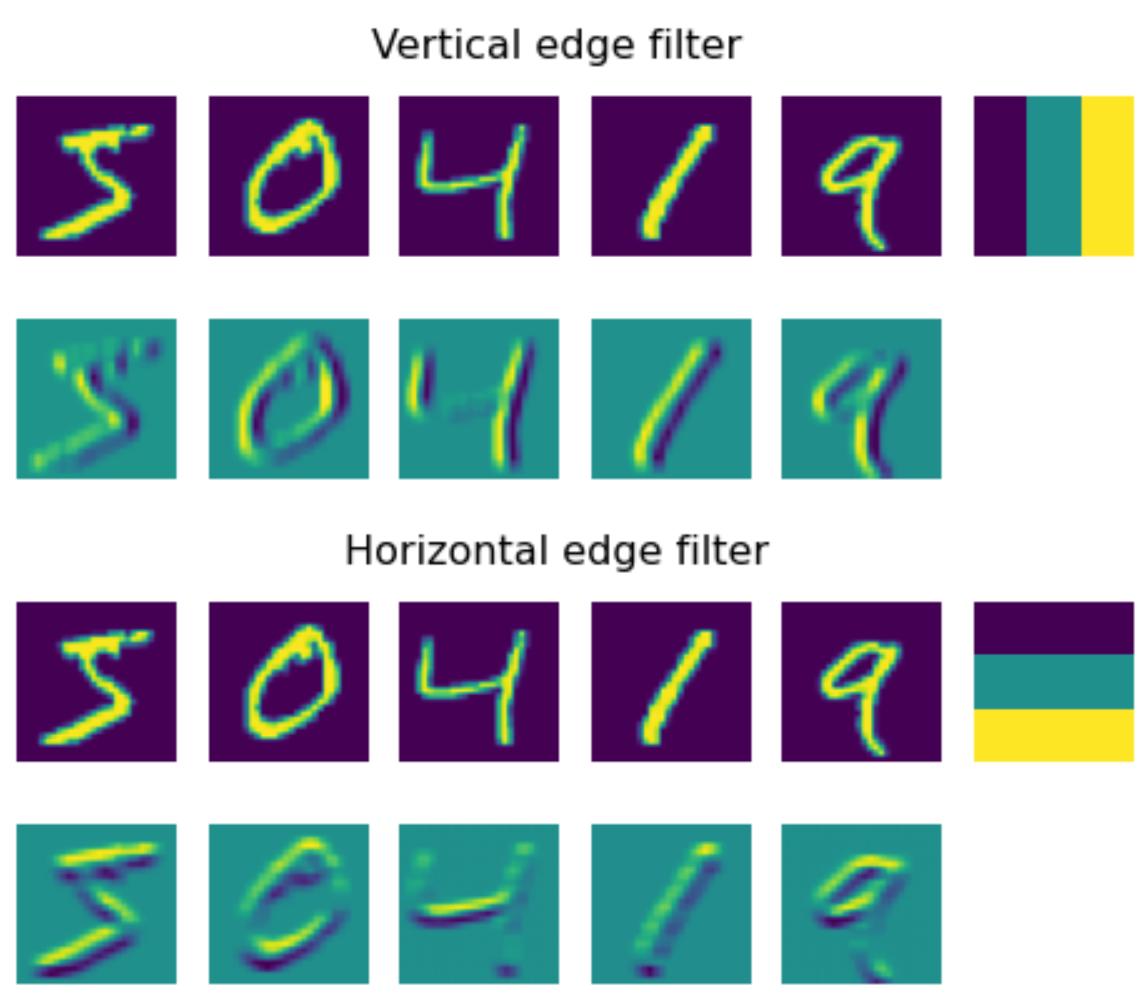
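The sliding-window computation described above can be sketched in plain Python. This is a minimal illustration rather than how PyTorch implements it: the function name `convolve2d`, the 4x4 test image, and the 3x3 averaging kernel are all made up for the example. It uses no padding and a stride of 1, and, like `nn.Conv2d`, it does not flip the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the weighted sum of each neighborhood."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j)
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical inputs: a 4x4 image holding 0..15 and a 3x3 averaging kernel
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
mean_kernel = [[1 / 9] * 3 for _ in range(3)]

result = convolve2d(image, mean_kernel)
print(result)  # a 2x2 output, because 4 - 3 + 1 = 2
```

With equal weights each output value is simply the mean of its 3x3 window; a learned filter replaces those equal weights with trained ones.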

Two filters applied to the MNIST digits (vertical and horizontal edge filters):

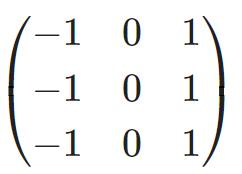

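    # Vertical edge filter: negative left column, positive right column
    plot_convolution(torch.tensor([[-1.,0.,1.],[-1.,0.,1.],[-1.,0.,1.]]),'Vertical edge filter')
    # Horizontal edge filter: negative top row, positive bottom row
    plot_convolution(torch.tensor([[-1.,-1.,-1.],[0.,0.,0.],[1.,1.,1.]]),'Horizontal edge filter')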

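Vertical edge filter: when it passes over a uniform field of pixels, the values sum to zero; when it meets a vertical edge it produces a high value, while horizontal edges are averaged out.

Horizontal edge filter: the opposite happens; horizontal lines are amplified and vertical edges are averaged out.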
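The behavior of the two 3x3 kernels passed to `plot_convolution` can be checked on a tiny synthetic image. The sketch below assumes a 6x6 image that is dark on the left and bright on the right, so it contains exactly one vertical edge; the helper `apply_filter` is made up for the example, while `torch.nn.functional.conv2d` is the standard PyTorch call.

```python
import torch
import torch.nn.functional as F

vertical = torch.tensor([[-1., 0., 1.], [-1., 0., 1.], [-1., 0., 1.]])
horizontal = torch.tensor([[-1., -1., -1.], [0., 0., 0.], [1., 1., 1.]])

# Synthetic 6x6 image: left half 0, right half 1 (a single vertical edge)
image = torch.zeros(6, 6)
image[:, 3:] = 1.0


def apply_filter(img, kernel):
    # conv2d expects [batch, channels, H, W] inputs and
    # [out_channels, in_channels, kH, kW] weights
    return F.conv2d(img[None, None], kernel[None, None])[0, 0]


v_resp = apply_filter(image, vertical)
h_resp = apply_filter(image, horizontal)

print(v_resp)  # non-zero only in the columns whose window straddles the edge
print(h_resp)  # all zeros: the image contains no horizontal edge
```

The vertical kernel responds only where its window covers both sides of the edge, and the horizontal kernel stays at zero throughout.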

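Traditional CV vs. deep learning:

- Traditional CV: several filters are designed by hand, then features are extracted, then a classifier is applied

- Deep learning: the network learns the optimal filters automatically and performs classification end to end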

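Convolutional layer: nn.Conv2d; it takes three parameters (in_channels=1, the number of input channels for a grayscale image; out_channels=9, i.e. nine filters; kernel_size=5x5, the size of the sliding window)

Input and output size: a 28x28 input produces a 9x24x24 output tensor after the convolution (because 28-5+1=24)

Network structure: the convolutional layer's output is flattened into a vector of 9x24x24=5184 values, which a linear layer maps to 10 classes; ReLU is the activation in between

Summary: the number of filters determines how many features are extracted; the kernel size influences (but does not by itself determine) the output size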
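The reason the kernel size only influences the output size is that stride and padding matter as well. A small sketch of the usual formula, floor((n + 2p - k) / s) + 1 for dilation 1, is shown below; the helper name `conv_output_size` is made up for illustration.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# The case from these notes: 28x28 input, 5x5 kernel, stride 1, no padding
side = conv_output_size(28, 5)
flattened = 9 * side * side  # 9 filters, each producing a side x side map
print(side, flattened)

# Same kernel, different settings: padding keeps the size, stride shrinks it
same_size = conv_output_size(28, 5, padding=2)
strided = conv_output_size(28, 5, stride=2)
print(same_size, strided)
```

The first line reproduces the 24 and 5184 used for `nn.Linear(5184,10)`; the second shows the same 5x5 kernel giving a different output size once padding or stride changes.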

Code:

    class OneConv(nn.Module):
        def __init__(self):
            super(OneConv, self).__init__()
            # 1 input channel (grayscale), 9 filters, 5x5 kernel: 28x28 -> 9x24x24
            self.conv = nn.Conv2d(in_channels=1,out_channels=9,kernel_size=(5,5))
            self.flatten = nn.Flatten()
            # 9*24*24 = 5184 flattened features -> 10 classes
            self.fc = nn.Linear(5184,10)

        def forward(self, x):
            x = nn.functional.relu(self.conv(x))
            x = self.flatten(x)
            x = nn.functional.log_softmax(self.fc(x),dim=1)
            return x

    net = OneConv()
    summary(net,input_size=(1,1,28,28))

Output:

    ==========================================================================================
    Layer (type:depth-idx)                   Output Shape              Param #
    ==========================================================================================
    ├─Conv2d: 1-1                            [1, 9, 24, 24]            234
    ├─Flatten: 1-2                           [1, 5184]                 --
    ├─Linear: 1-3                            [1, 10]                   51,850
    ==========================================================================================
    Total params: 52,084
    Trainable params: 52,084
    Non-trainable params: 0
    Total mult-adds (M): 0.18
    ==========================================================================================
    Input size (MB): 0.00
    Forward/backward pass size (MB): 0.04
    Params size (MB): 0.21
    Estimated Total Size (MB): 0.25
    ==========================================================================================

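Result: the network has about 50k trainable parameters, compared with about 80k for the fully connected multi-layer network, which lets it achieve better results even on small datasets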
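The parameter counts in the summary can be reproduced by hand. The sketch below uses two helper functions whose names are made up for the example: each convolution filter has one weight per input channel and kernel position plus one bias, and a linear layer has one weight per input/output pair plus one bias per output.

```python
def conv2d_params(in_channels, out_channels, kernel_size):
    # Per filter: in_channels * k * k weights + 1 bias
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def linear_params(in_features, out_features):
    # Weight matrix plus one bias per output
    return in_features * out_features + out_features


conv = conv2d_params(1, 9, 5)        # the Conv2d layer of OneConv
fc = linear_params(9 * 24 * 24, 10)  # the Linear(5184, 10) layer
total = conv + fc
print(conv, fc, total)
```

Almost all of the parameters sit in the linear layer; the convolution itself contributes only a few hundred.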

Plotting:

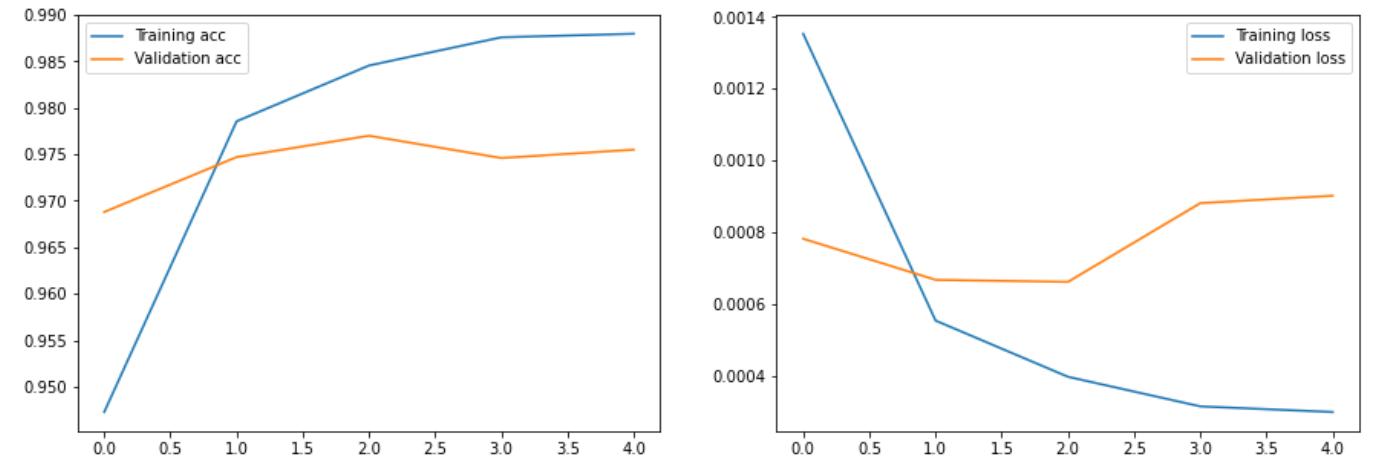

    hist = train(net,train_loader,test_loader,epochs=5)
    plot_results(hist)

Results:

Epoch 0, Train acc=0.947, Val acc=0.969, Train loss=0.001, Val loss=0.001

Epoch 1, Train acc=0.979, Val acc=0.975, Train loss=0.001, Val loss=0.001

Epoch 2, Train acc=0.985, Val acc=0.977, Train loss=0.000, Val loss=0.001

Epoch 3, Train acc=0.988, Val acc=0.975, Train loss=0.000, Val loss=0.001

Epoch 4, Train acc=0.988, Val acc=0.976, Train loss=0.000, Val loss=0.001

Visualizing the weights of the trained convolutional layer:

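    fig,ax = plt.subplots(1,9)
    with torch.no_grad():
        # The first parameter of the conv layer is its weight tensor, shape [9, 1, 5, 5]
        p = next(net.conv.parameters())
        for i,x in enumerate(p):
            # x has shape [1, 5, 5]; show its single input channel
            ax[i].imshow(x.detach().cpu()[0,...])
            ax[i].axis('off')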

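Convolutional layers: detect patterns in the image. Process: the early layers detect simple patterns (lines) -> later layers combine those patterns to detect more complex features (shapes) -> the final layers classify the object

Pooling layers: reduce the spatial size of the image, lower the computational cost, and help prevent overfitting. There are two kinds:

- Average pooling: slides a 2x2-pixel window over the image and computes the average of the values in it

- Max pooling: replaces the window with its maximum value, which detects whether a pattern is present in the window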

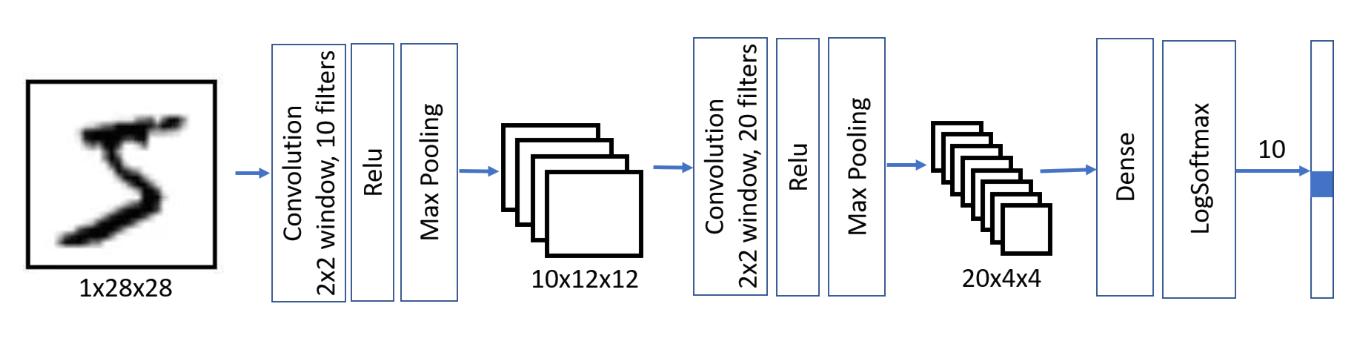
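The two pooling variants can be compared on a small tensor. The sketch below uses a made-up 4x4 input and 2x2 windows with `nn.AvgPool2d` and `nn.MaxPool2d`; the input values are chosen only so the four windows are easy to read.

```python
import torch
import torch.nn as nn

# Hypothetical 4x4 feature map, shaped [batch, channels, H, W]
x = torch.tensor([[1., 2., 5., 6.],
                  [3., 4., 7., 8.],
                  [9., 10., 13., 14.],
                  [11., 12., 15., 16.]])[None, None]

avg_pooled = nn.AvgPool2d(2)(x)[0, 0]  # mean of each 2x2 window
max_pooled = nn.MaxPool2d(2)(x)[0, 0]  # maximum of each 2x2 window

print(avg_pooled)  # 2x2 result: the spatial size is halved
print(max_pooled)
```

Both layers halve the height and width and have no trainable parameters; they differ only in how each window is summarized.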

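CNN architecture: layers (pooling layers are inserted between several convolutional layers), feature maps (the spatial size decreases while the number of filters increases), output (fully connected layers plus an activation function perform the classification)

Pyramid architecture: the spatial size gradually decreases while the number of filters gradually increases, which extracts features for classification efficiently

Walkthrough: 1x28x28 input image -> first convolutional layer -> second convolutional layer -> output (fully connected layer, with LogSoftmax over the 10 classes)

(each convolutional layer uses a 5x5 kernel followed by ReLU activation and 2x2 max pooling; the first has 10 filters and the second has 20)

Code: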

    class MultiLayerCNN(nn.Module):
        def __init__(self):
            super(MultiLayerCNN, self).__init__()
            self.conv1 = nn.Conv2d(1, 10, 5)
            # Pooling has no parameters, so one instance serves both stages
            self.pool = nn.MaxPool2d(2, 2)
            self.conv2 = nn.Conv2d(10, 20, 5)
            # 20 channels * 4 * 4 = 320 features after the second pooling
            self.fc = nn.Linear(320,10)

        def forward(self, x):
            x = self.pool(nn.functional.relu(self.conv1(x)))
            x = self.pool(nn.functional.relu(self.conv2(x)))
            # Flatten to [batch, 320] without a separate Flatten layer
            x = x.view(-1, 320)
            x = nn.functional.log_softmax(self.fc(x),dim=1)
            return x

    net = MultiLayerCNN()
    summary(net,input_size=(1,1,28,28))

Output:

    ==========================================================================================
    Layer (type:depth-idx)                   Output Shape              Param #
    ==========================================================================================
    ├─Conv2d: 1-1                            [1, 10, 24, 24]           260
    ├─MaxPool2d: 1-2                         [1, 10, 12, 12]           --
    ├─Conv2d: 1-3                            [1, 20, 8, 8]             5,020
    ├─MaxPool2d: 1-4                         [1, 20, 4, 4]             --
    ├─Linear: 1-5                            [1, 10]                   3,210
    ==========================================================================================
    Total params: 8,490
    Trainable params: 8,490
    Non-trainable params: 0
    Total mult-adds (M): 0.47
    ==========================================================================================
    Input size (MB): 0.00
    Forward/backward pass size (MB): 0.06
    Params size (MB): 0.03
    Estimated Total Size (MB): 0.09
    ==========================================================================================

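Flatten: the tensor is flattened with view inside forward, so no separate layer is needed

Pooling: has no trainable parameters, so a single instance can be reused

Parameters: few (~8.5k), which helps prevent overfitting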
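The 320 in `nn.Linear(320,10)` and the roughly 8.5k parameters both follow from tracing the spatial size through the network. The arithmetic sketch below assumes stride-1 convolutions without padding and 2x2 pooling with stride 2, matching the `MultiLayerCNN` code; the helper names are made up for the example.

```python
def conv_out(size, kernel):
    # Stride 1, no padding
    return size - kernel + 1


def pool_out(size, window):
    # Non-overlapping windows (stride equal to the window size)
    return size // window


size = 28
trace = [size]
for step, arg in [(conv_out, 5), (pool_out, 2), (conv_out, 5), (pool_out, 2)]:
    size = step(size, arg)
    trace.append(size)

flat_features = 20 * size * size  # 20 channels from conv2

# Parameter counts: weights plus biases for each layer
conv1_params = 10 * (1 * 5 * 5 + 1)
conv2_params = 20 * (10 * 5 * 5 + 1)
fc_params = flat_features * 10 + 10
total_params = conv1_params + conv2_params + fc_params

print(trace, flat_features, total_params)
```

Because pooling shrinks the feature maps before the linear layer, that layer needs far fewer weights than the 51,850 in the single-convolution network.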

Code:

    hist = train(net,train_loader,test_loader,epochs=5)

Result: accuracy is higher than with a single convolutional layer and is reached faster, within just 1-2 epochs

Epoch 0, Train acc=0.952, Val acc=0.977, Train loss=0.001, Val loss=0.001

Epoch 1, Train acc=0.982, Val acc=0.983, Train loss=0.000, Val loss=0.000

Epoch 2, Train acc=0.986, Val acc=0.983, Train loss=0.000, Val loss=0.000

Epoch 3, Train acc=0.986, Val acc=0.978, Train loss=0.000, Val loss=0.001

Epoch 4, Train acc=0.987, Val acc=0.981, Train loss=0.000, Val loss=0.000

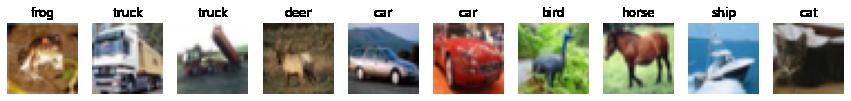

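Using the CIFAR-10 dataset: 60,000 images (32x32, 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck; more complex than handwritten digits). A commonly used architecture: LeNet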

    # Convert images to tensors in [0, 1], then normalize each RGB channel to [-1, 1]
    transform = torchvision.transforms.Compose(
        [torchvision.transforms.ToTensor(),
         torchvision.transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=14, shuffle=True)
    testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=14, shuffle=False)
    classes = ('plane', 'car', 'bird', 'cat',
               'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

    display_dataset(trainset,classes=classes)

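LeNet architecture: takes RGB input; the output layer does not apply log_softmax and returns the output of the last fully connected layer directly, because the model is optimized with the CrossEntropyLoss loss function, which applies log-softmax internally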
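The reason the output layer can skip log_softmax is that `nn.CrossEntropyLoss` on raw outputs gives the same value as `nn.NLLLoss` on log-softmax outputs. The sketch below checks this on random data; the batch of 4 samples and the target labels are made up for the example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)            # raw network outputs: 4 samples, 10 classes
targets = torch.tensor([3, 0, 9, 1])   # hypothetical class labels

# Option 1: raw logits straight into CrossEntropyLoss
ce = nn.CrossEntropyLoss()(logits, targets)

# Option 2: explicit log_softmax followed by negative log-likelihood
nll = nn.NLLLoss()(F.log_softmax(logits, dim=1), targets)

print(ce.item(), nll.item())  # the two losses agree
```

Applying log_softmax in the model and then using CrossEntropyLoss would apply the normalization twice, so the two conventions should not be mixed.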

    class LeNet(nn.Module):
        def __init__(self):
            super(LeNet, self).__init__()
            # 3 input channels (RGB)
            self.conv1 = nn.Conv2d(3, 6, 5)
            self.pool = nn.MaxPool2d(2)
            self.conv2 = nn.Conv2d(6, 16, 5)
            self.conv3 = nn.Conv2d(16,120,5)
            self.flat = nn.Flatten()
            self.fc1 = nn.Linear(120,64)
            self.fc2 = nn.Linear(64,10)

        def forward(self, x):
            x = self.pool(nn.functional.relu(self.conv1(x)))
            x = self.pool(nn.functional.relu(self.conv2(x)))
            x = nn.functional.relu(self.conv3(x))
            x = self.flat(x)
            x = nn.functional.relu(self.fc1(x))
            # Return raw outputs; CrossEntropyLoss applies log-softmax itself
            x = self.fc2(x)
            return x

    net = LeNet()
    summary(net,input_size=(1,3,32,32))

    opt = torch.optim.SGD(net.parameters(),lr=0.001,momentum=0.9)
    hist = train(net, trainloader, testloader, epochs=3, optimizer=opt, loss_fn=nn.CrossEntropyLoss())

Output: after 3 epochs; for a task this complex, about 50% accuracy is already decent

Epoch 0, Train acc=0.261, Val acc=0.388, Train loss=0.143, Val loss=0.121

Epoch 1, Train acc=0.437, Val acc=0.491, Train loss=0.110, Val loss=0.101

Epoch 2, Train acc=0.508, Val acc=0.522, Train loss=0.097, Val loss=0.094
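To see why `fc1` in the LeNet class takes 120 input features, one option is to push a dummy tensor through freshly created layers with the same settings and record the shapes. This is only a shape check on untrained layers, not the trained network; ReLU is left out because it does not change shapes.

```python
import torch
import torch.nn as nn

x = torch.zeros(1, 3, 32, 32)  # one dummy RGB image of CIFAR-10 size
pool = nn.MaxPool2d(2)

# Same layer settings as the LeNet class, in forward order
stages = [
    ("conv1", nn.Conv2d(3, 6, 5)),
    ("pool", pool),
    ("conv2", nn.Conv2d(6, 16, 5)),
    ("pool", pool),
    ("conv3", nn.Conv2d(16, 120, 5)),
]

shapes = []
with torch.no_grad():
    for name, layer in stages:
        x = layer(x)
        shapes.append((name, tuple(x.shape)))
        print(name, tuple(x.shape))

flat = x.flatten(1)       # what nn.Flatten() would produce
print(tuple(flat.shape))  # the input size expected by fc1
```

The third convolution reduces the 5x5 feature maps to 1x1, so flattening leaves exactly one value per filter.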

Further reading:

- Pet face recognition lab

- Convolutional neural networks in PyTorch: a first try

- OpenCV lab

- Palm movement detection using optical flow