```python
import tensorflow as tf
from tensorflow import keras
import numpy as np
from sklearn.datasets import make_classification
import matplotlib.pyplot as plt

print(f'Tensorflow version = {tf.__version__}')
print(f'Keras version = {keras.__version__}')
```

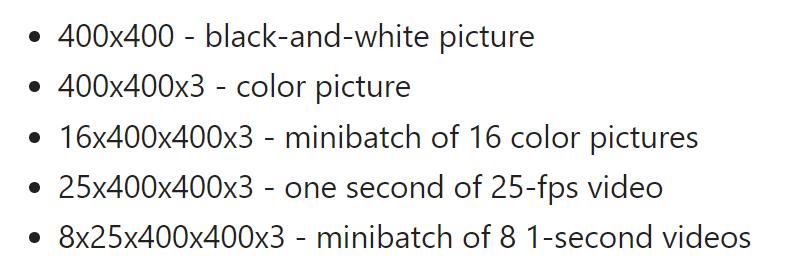

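Tensor: a multi-dimensional array. Tensors represent the data a network consumes and produces, e.g. visual data (inputs/outputs).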
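As a small illustration (using NumPy rather than TensorFlow, with made-up shapes), a batch of images is naturally a rank-4 array with axes (batch, height, width, channels), which is the layout Keras image layers expect by default, while the matching labels form a rank-1 array. Keras models accept NumPy arrays like these directly.

```python
import numpy as np

# Hypothetical batch of 8 RGB images, 32x32 pixels each:
# axes = (batch, height, width, channels)
images = np.zeros((8, 32, 32, 3), dtype=np.float32)

# Matching batch of 8 integer class labels (rank-1 tensor)
labels = np.zeros((8,), dtype=np.int32)

print(images.ndim, images.shape)
print(labels.ndim, labels.shape)

# A single image is a rank-3 slice of the batch
first = images[0]
print(first.shape)
```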

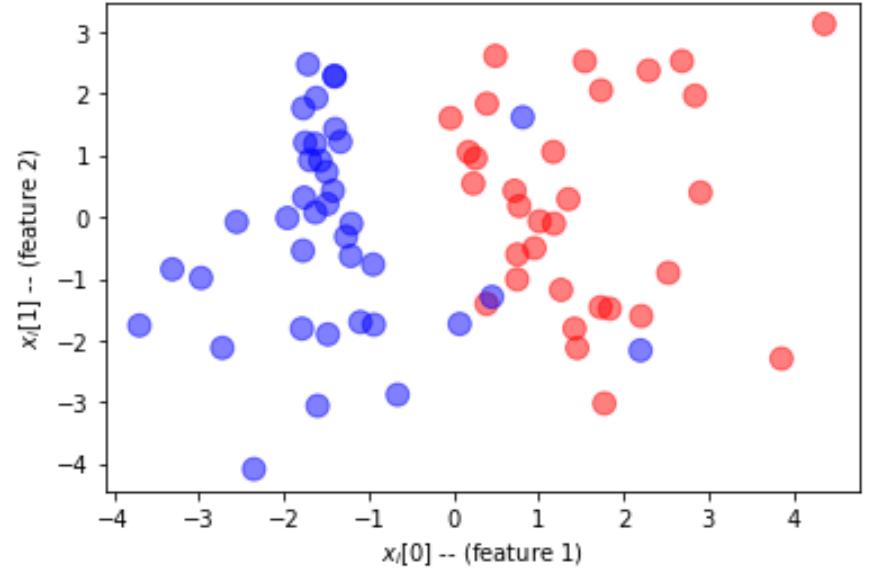

Classification experiment:

```python
np.random.seed(0) # pick the seed for reproducibility - change it to explore the effects of random variations

# 100 samples with 2 informative features; flip_y=0.05 assigns ~5% of the labels at random (label noise)
n = 100
X, Y = make_classification(n_samples=n, n_features=2, n_redundant=0, n_informative=2, flip_y=0.05, class_sep=1.5)
X = X.astype(np.float32)
Y = Y.astype(np.int32)

# 70/30 split into training and test data
split = [70 * n // 100]
train_x, test_x = np.split(X, split)
train_labels, test_labels = np.split(Y, split)
```
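`np.split` with a list of indices cuts an array at those positions, so `split = [70]` yields the first 70 rows and the remaining 30. A minimal check on stand-in arrays (not the `make_classification` data):

```python
import numpy as np

n = 100
X = np.arange(n * 2, dtype=np.float32).reshape(n, 2)  # stand-in features, shape (100, 2)
Y = np.arange(n) % 2                                  # stand-in 0/1 labels

split = [70 * n // 100]            # [70]: one cut point -> two pieces
train_x, test_x = np.split(X, split)
train_labels, test_labels = np.split(Y, split)

print(train_x.shape, test_x.shape)
print(len(train_labels), len(test_labels))

# Equivalent to plain slicing at the cut point
same_as_slicing = np.array_equal(train_x, X[:70]) and np.array_equal(test_x, X[70:])
print(same_as_slicing)
```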

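```python
def plot_dataset(features, labels, W=None, b=None):
    # prepare the plot
    fig, ax = plt.subplots(1, 1)
    ax.set_xlabel('$x_i[0]$ -- (feature 1)')
    ax.set_ylabel('$x_i[1]$ -- (feature 2)')
    # class 1 in red, class 0 in blue
    colors = ['r' if l else 'b' for l in labels]
    ax.scatter(features[:, 0], features[:, 1], marker='o', c=colors, s=100, alpha=0.5)
    if W is not None:
        # accept Keras variables or NumPy arrays: kernel (2, 1) -> (2,), bias (1,) -> scalar
        W = np.asarray(W).ravel()
        b = float(np.asarray(b).ravel()[0])
        min_x = min(features[:, 0])
        max_x = max(features[:, 0])
        min_y = min(features[:, 1]) * (1 - .1)
        max_y = max(features[:, 1]) * (1 + .1)
        # decision boundary of a sigmoid unit: W[0]*x + W[1]*y + b = 0 (output = 0.5)
        cx = np.array([min_x, max_x], dtype=np.float32)
        cy = -(W[0] * cx + b) / W[1]
        ax.plot(cx, cy, 'g')
        ax.set_ylim(min_y, max_y)
    fig.show()
```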

Plot:

```python
plot_dataset(train_x, train_labels)
```

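Data normalization (min-max scaling to the range 0 to 1):

```python
# the test set is scaled with the training set's min/max
train_x_norm = (train_x-np.min(train_x,axis=0)) / (np.max(train_x,axis=0)-np.min(train_x,axis=0))
test_x_norm = (test_x-np.min(train_x,axis=0)) / (np.max(train_x,axis=0)-np.min(train_x,axis=0))
```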
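The same min-max scaling as a reusable helper; a sketch with a hypothetical function name `min_max_normalize` and toy numbers. The statistics come from the training set only, so test values can land outside the range 0 to 1; that is expected and keeps test information out of preprocessing.

```python
import numpy as np

def min_max_normalize(train, test):
    """Scale each feature (column) using the training set's min and max only."""
    lo = train.min(axis=0)
    scale = train.max(axis=0) - lo
    return (train - lo) / scale, (test - lo) / scale

train = np.array([[1.0, 10.0], [3.0, 20.0], [5.0, 30.0]], dtype=np.float32)
test = np.array([[2.0, 40.0]], dtype=np.float32)

train_n, test_n = min_max_normalize(train, test)
print(train_n)  # every training column now spans exactly 0..1
print(test_n)   # the second test feature exceeds 1 (40 is above the training max of 30)
```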

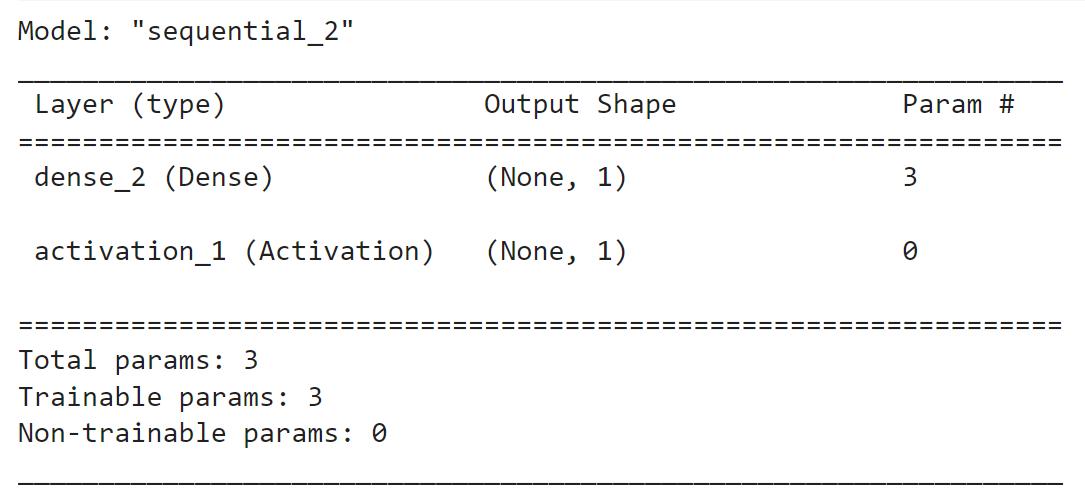

Training a one-layer neural network (perceptron):

A neural network is a sequence of layers; in Keras it is defined with the `Sequential` model.

```python
model = keras.models.Sequential()
model.add(keras.Input(shape=(2,)))
model.add(keras.layers.Dense(1))
model.add(keras.layers.Activation(keras.activations.sigmoid))
model.summary()
```

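Output:

- Input layer (strictly speaking not a layer): defines the size of the network input.
- Dense layer: the actual perceptron, holding the trainable weights W (and bias b).
- Activation layer: applies the sigmoid so that the result lies in the range 0 to 1.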
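What the Dense + sigmoid pair computes for one sample can be sketched in NumPy with hypothetical weights: the Dense layer forms z = xW + b (Keras stores the kernel with shape (inputs, units)), and the activation maps z into (0, 1). Two weights plus one bias give 3 trainable parameters, which is what `model.summary()` should report for this model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical weights for a Dense(1) layer with 2 inputs
W = np.array([[0.8], [-0.4]], dtype=np.float32)   # kernel, shape (2, 1)
b = np.array([0.1], dtype=np.float32)              # bias, shape (1,)

x = np.array([[0.5, 0.25]], dtype=np.float32)      # one sample, shape (1, 2)

z = x @ W + b          # Dense layer: weighted sum plus bias, shape (1, 1)
y = sigmoid(z)         # Activation layer: squashes z into (0, 1)

n_params = W.size + b.size   # 2 weights + 1 bias
print(z, y, n_params)
```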

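The same model can be written more compactly by passing `input_shape` and `activation` directly to `Dense`. It is then compiled with an optimizer, a loss function and the metrics to report:

```python
model = keras.models.Sequential()
model.add(keras.layers.Dense(1, input_shape=(2,), activation='sigmoid'))
model.summary()

model.compile(optimizer=keras.optimizers.SGD(learning_rate=0.2), loss='binary_crossentropy', metrics=['acc'])
```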
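`binary_crossentropy` measures how far the predicted probabilities are from the 0/1 labels. A NumPy sketch of the formula, averaged over samples; the clipping constant 1e-7 mirrors Keras's default epsilon, but treat this as an illustration rather than Keras's exact implementation:

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; y_pred holds probabilities in (0, 1)."""
    y_pred = np.clip(y_pred, eps, 1 - eps)   # avoid log(0)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

y_true = np.array([1, 0, 1, 0], dtype=np.float32)
good = np.array([0.9, 0.1, 0.8, 0.2], dtype=np.float32)   # confident and correct
bad = np.array([0.1, 0.9, 0.2, 0.8], dtype=np.float32)    # confident and wrong

loss_good = binary_crossentropy(y_true, good)
loss_bad = binary_crossentropy(y_true, bad)
print(loss_good, loss_bad)
```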

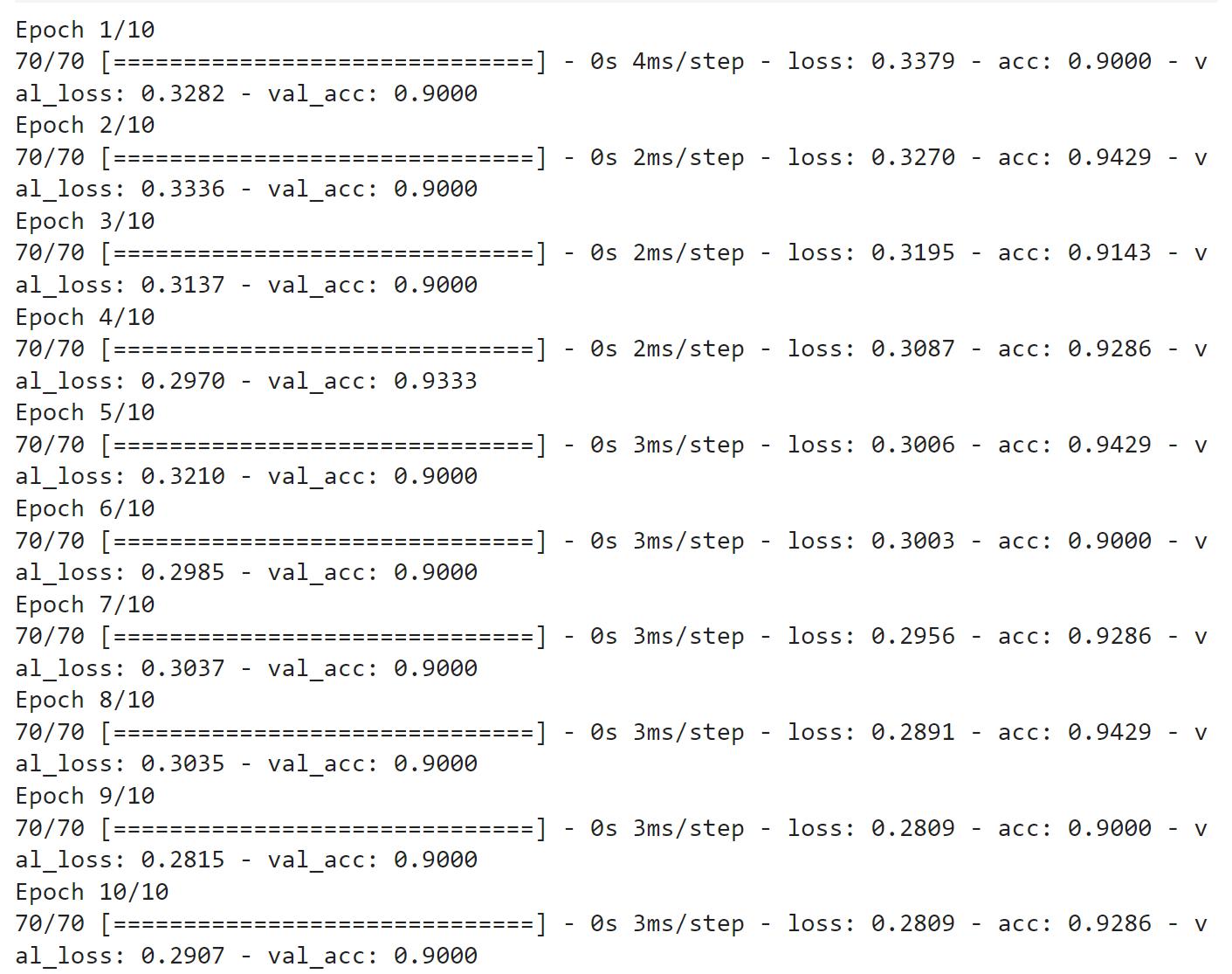

```python
# batch_size=1: the weights are updated after every single training sample
model.fit(x=train_x_norm, y=train_labels, validation_data=(test_x_norm, test_labels), epochs=10, batch_size=1)
```
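With `SGD` and `batch_size=1`, each epoch performs one weight update per training sample (70 updates here), and Keras shuffles the training data each epoch by default. The loop below sketches that procedure for a single sigmoid unit in plain NumPy on hypothetical toy data; the initialization, the data and the function name `train_sgd` are assumptions, not what Keras does internally.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_sgd(X, y, lr=0.2, epochs=10, seed=0):
    """One sigmoid unit trained with plain SGD, one sample per update."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])   # small random initial weights
    b = 0.0
    for _ in range(epochs):
        for i in rng.permutation(len(X)):        # new random sample order each epoch
            p = sigmoid(X[i] @ w + b)            # forward pass for a single sample
            g = p - y[i]                         # d(binary cross-entropy)/dz for a sigmoid output
            w -= lr * g * X[i]                   # gradient step on the weights
            b -= lr * g                          # gradient step on the bias
    return w, b

# Hypothetical toy data: label 1 when x0 + x1 > 1, points near the boundary removed
rng = np.random.default_rng(1)
X = rng.random((100, 2))
X = X[np.abs(X.sum(axis=1) - 1.0) > 0.1]
y = (X.sum(axis=1) > 1.0).astype(np.float64)

w, b = train_sgd(X, y)
acc = np.mean((sigmoid(X @ w + b) > 0.5) == y)
print(f'w={w}, b={b:.3f}, training accuracy={acc:.2f}')
```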

Training progress:

Output:

```
<keras.callbacks.History at 0x2c420b89910>
```

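Plot:

```python
# the weights were learned on normalized features, so plot the normalized data
plot_dataset(train_x_norm, train_labels, model.layers[0].weights[0], model.layers[0].weights[1])
```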
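For a sigmoid unit the predicted probability equals 0.5 exactly where z = W[0]*x + W[1]*y + b = 0, so the decision boundary is the line y = -(W[0]*x + b) / W[1]. A NumPy check with hypothetical weights (the helper name `boundary_y` is illustrative); with the trained Keras model you could obtain the real ones via `model.layers[0].weights[0].numpy().ravel()` and `model.layers[0].weights[1].numpy()[0]`. Because the model was trained on normalized features, the line only matches points plotted in that normalized space.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def boundary_y(W, b, x):
    """Feature-2 value on the line W[0]*x + W[1]*y + b = 0 (requires W[1] != 0)."""
    return -(W[0] * x + b) / W[1]

W = np.array([2.0, -1.5])   # hypothetical trained weights
b = 0.3                     # hypothetical trained bias

xs = np.array([0.0, 0.5, 1.0])
ys = boundary_y(W, b, xs)

# Every point on the boundary gets a predicted probability of 0.5
probs = sigmoid(W[0] * xs + W[1] * ys + b)
print(ys, probs)
```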

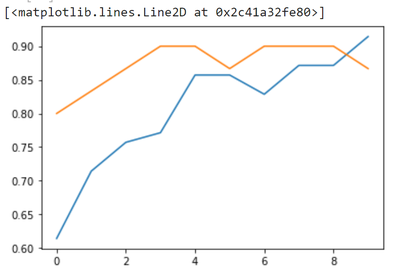

Plotting the training curves:

```python
model = keras.models.Sequential([
    keras.layers.Dense(1, input_shape=(2,), activation='sigmoid')])
model.compile(optimizer=keras.optimizers.SGD(learning_rate=0.05), loss='binary_crossentropy', metrics=['acc'])
```

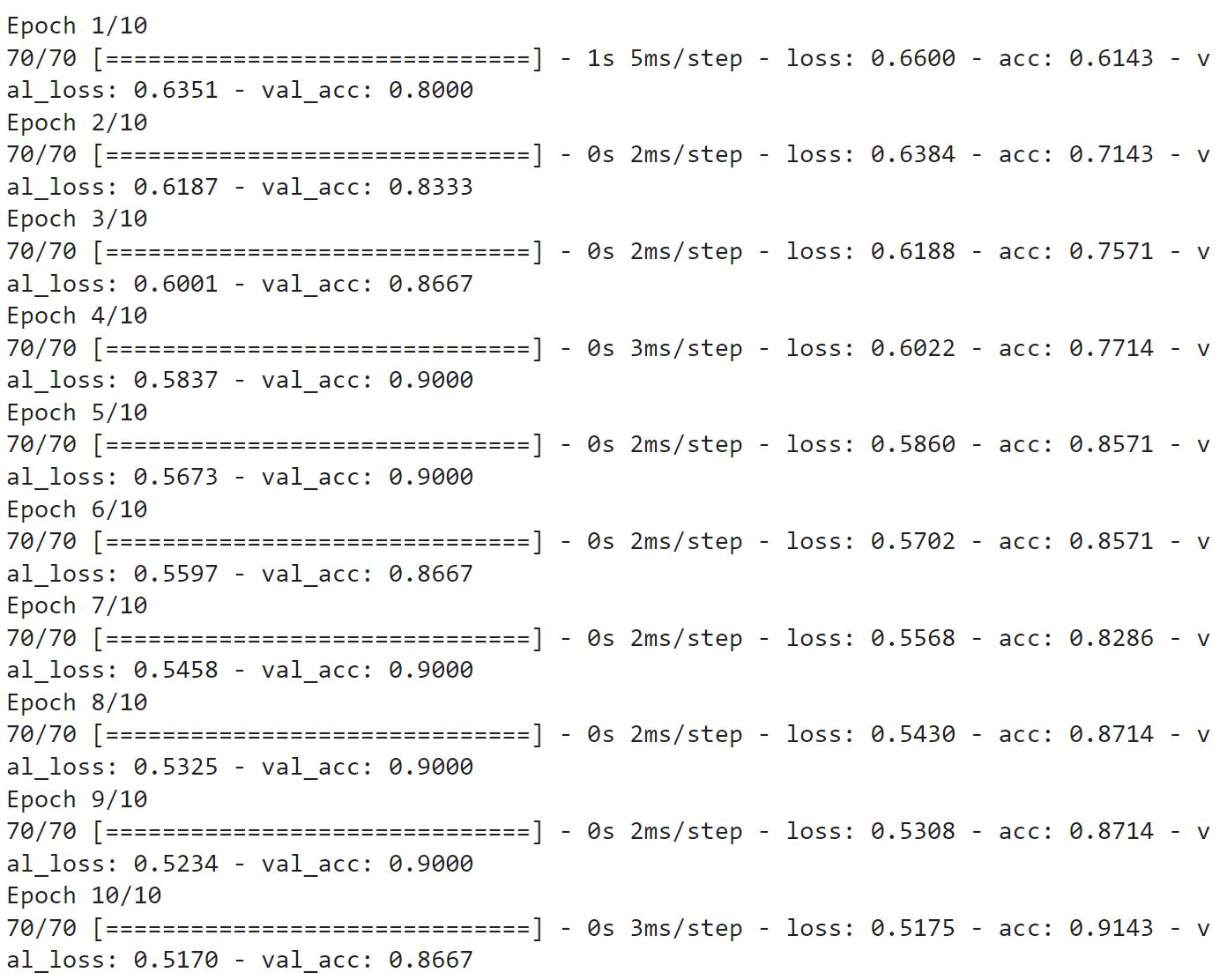

```python
hist = model.fit(x=train_x_norm, y=train_labels, validation_data=(test_x_norm, test_labels), epochs=10, batch_size=1)
```

Training progress:

Input:

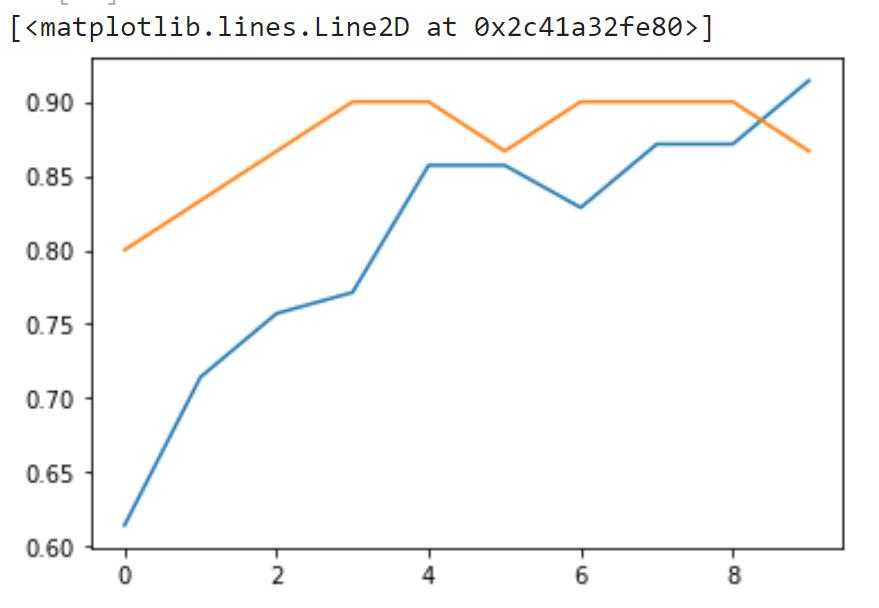

```python
plt.plot(hist.history['acc'], label='training accuracy')
plt.plot(hist.history['val_acc'], label='validation accuracy')
plt.legend()
plt.show()
```

Output:

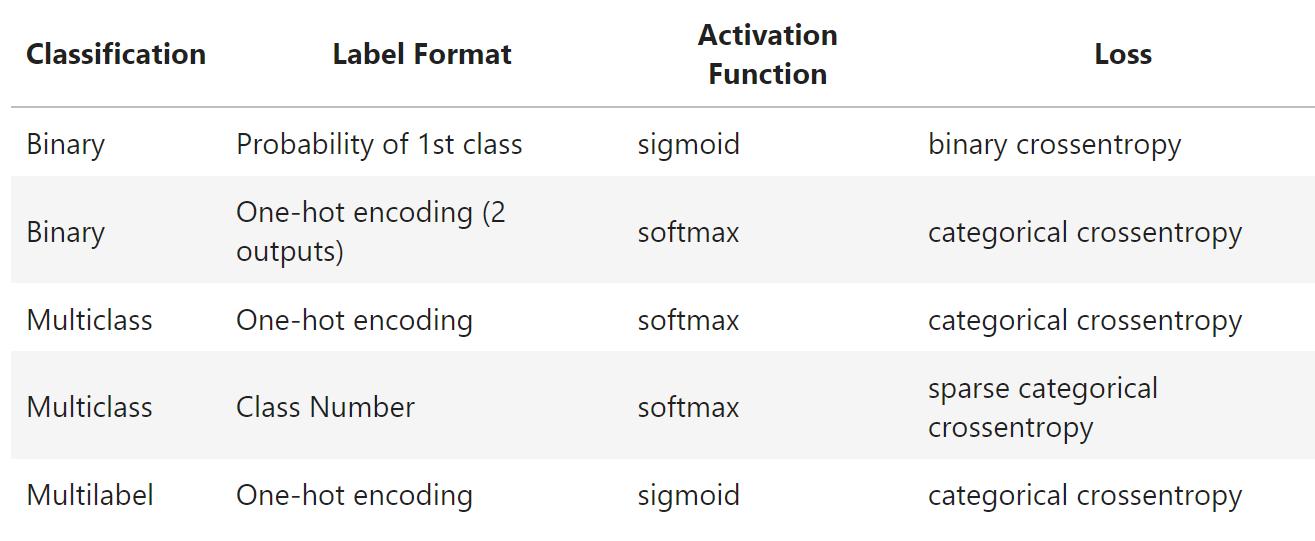

Multi-class classification:

```python
model = keras.models.Sequential([
    keras.layers.Dense(5, input_shape=(2,), activation='relu'),
    keras.layers.Dense(2, activation='softmax')])
model.compile(keras.optimizers.Adam(0.01), 'categorical_crossentropy', ['acc'])
```
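The hidden `Dense(5, activation='relu')` layer computes max(0, z), and the output `Dense(2, activation='softmax')` turns its two scores into probabilities that sum to 1; `categorical_crossentropy` then averages -log of the probability assigned to the true class. A NumPy sketch on hypothetical logits (the clipping constant is an assumption mirroring Keras's default epsilon):

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    probs = np.clip(probs, eps, 1.0)
    return -np.mean(np.sum(y_onehot * np.log(probs), axis=-1))

logits = np.array([[2.0, 0.0], [0.0, 3.0]])      # hypothetical scores before the softmax
y_onehot = np.array([[1.0, 0.0], [0.0, 1.0]])    # true classes 0 and 1

p = softmax(logits)
loss = categorical_crossentropy(y_onehot, p)
print(p.sum(axis=1), loss)
```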

```python
# Two ways to convert to one-hot encoding
train_labels_onehot = keras.utils.to_categorical(train_labels)
test_labels_onehot = np.eye(2)[test_labels]
```
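Both conversions produce the same matrix: row i of the 2x2 identity has a 1 in column i, so indexing `np.eye(2)` with the labels picks one such row per sample. A NumPy sketch with made-up labels, also showing that `argmax` inverts the encoding (`keras.utils.to_categorical` should give the same values, as float32):

```python
import numpy as np

labels = np.array([0, 1, 1, 0, 1])

onehot_eye = np.eye(2)[labels]                      # one identity row per label
onehot_manual = np.zeros((labels.size, 2))
onehot_manual[np.arange(labels.size), labels] = 1.0  # set column `label` in each row

print(onehot_eye)

# argmax over the class axis recovers the integer labels
recovered = onehot_eye.argmax(axis=1)
print(recovered)
```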

```python
hist = model.fit(x=train_x_norm, y=train_labels_onehot,
                 validation_data=(test_x_norm, test_labels_onehot), batch_size=1, epochs=10)
```

Training log:

```
Epoch 1/10
70/70 [==============================] - 1s 6ms/step - loss: 0.6524 - acc: 0.7000 - val_loss: 0.5936 - val_acc: 0.9000
Epoch 2/10
70/70 [==============================] - 0s 2ms/step - loss: 0.5715 - acc: 0.8286 - val_loss: 0.5255 - val_acc: 0.8333
Epoch 3/10
70/70 [==============================] - 0s 3ms/step - loss: 0.4820 - acc: 0.8714 - val_loss: 0.4213 - val_acc: 0.9000
Epoch 4/10
70/70 [==============================] - 0s 3ms/step - loss: 0.4426 - acc: 0.9000 - val_loss: 0.3694 - val_acc: 0.9333
Epoch 5/10
70/70 [==============================] - 0s 3ms/step - loss: 0.3602 - acc: 0.9000 - val_loss: 0.3454 - val_acc: 0.9000
Epoch 6/10
70/70 [==============================] - 0s 3ms/step - loss: 0.3209 - acc: 0.8857 - val_loss: 0.2862 - val_acc: 0.9333
Epoch 7/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2905 - acc: 0.9286 - val_loss: 0.2787 - val_acc: 0.9000
Epoch 8/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2698 - acc: 0.9000 - val_loss: 0.2381 - val_acc: 0.9333
Epoch 9/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2639 - acc: 0.8857 - val_loss: 0.2217 - val_acc: 0.9667
Epoch 10/10
70/70 [==============================] - 0s 2ms/step - loss: 0.2592 - acc: 0.9286 - val_loss: 0.2391 - val_acc: 0.9000
```

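Sparse categorical cross-entropy loss: this loss takes the integer class labels directly, so no one-hot encoding is needed. Recompiling does not reset the weights, so this run continues training the model from above, which is why the loss already starts low.

```python
model.compile(keras.optimizers.Adam(0.01), 'sparse_categorical_crossentropy', ['acc'])
model.fit(x=train_x_norm, y=train_labels, validation_data=(test_x_norm, test_labels), batch_size=1, epochs=10)
```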
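Sparse categorical cross-entropy is the same loss as categorical cross-entropy; it just looks up the true class's probability with the integer label instead of multiplying by a one-hot vector. A NumPy sketch on hypothetical probabilities confirming both give the same value (function names are illustrative):

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Pick each sample's predicted probability for its true (integer) class."""
    p_true = np.clip(probs[np.arange(len(labels)), labels], eps, 1.0)
    return -np.mean(np.log(p_true))

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    return -np.mean(np.sum(y_onehot * np.log(np.clip(probs, eps, 1.0)), axis=-1))

probs = np.array([[0.7, 0.3], [0.2, 0.8], [0.6, 0.4]])  # hypothetical softmax outputs
labels = np.array([0, 1, 1])                             # integer labels

sparse = sparse_categorical_crossentropy(labels, probs)
dense = categorical_crossentropy(np.eye(2)[labels], probs)
print(sparse, dense)
```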

Training log:

```
Epoch 1/10
70/70 [==============================] - 1s 6ms/step - loss: 0.2353 - acc: 0.9143 - val_loss: 0.2190 - val_acc: 0.9000
Epoch 2/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2243 - acc: 0.9286 - val_loss: 0.1886 - val_acc: 0.9333
Epoch 3/10
70/70 [==============================] - 0s 2ms/step - loss: 0.2366 - acc: 0.9143 - val_loss: 0.2262 - val_acc: 0.9000
Epoch 4/10
70/70 [==============================] - 0s 2ms/step - loss: 0.2259 - acc: 0.9429 - val_loss: 0.2124 - val_acc: 0.9000
Epoch 5/10
70/70 [==============================] - 0s 2ms/step - loss: 0.2061 - acc: 0.9429 - val_loss: 0.2691 - val_acc: 0.9000
Epoch 6/10
70/70 [==============================] - 0s 2ms/step - loss: 0.2200 - acc: 0.9286 - val_loss: 0.2344 - val_acc: 0.9000
Epoch 7/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2133 - acc: 0.9286 - val_loss: 0.1973 - val_acc: 0.9000
Epoch 8/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2062 - acc: 0.9429 - val_loss: 0.1893 - val_acc: 0.9000
Epoch 9/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2060 - acc: 0.9571 - val_loss: 0.2719 - val_acc: 0.9000
Epoch 10/10
70/70 [==============================] - 0s 3ms/step - loss: 0.2021 - acc: 0.9571 - val_loss: 0.2293 - val_acc: 0.9000
```

Output:

```
<keras.callbacks.History at 0x2c42267de80>
```

Multi-label classification:
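A common setup for multi-label classification, where several classes can be true at once, is one sigmoid output per label trained with `binary_crossentropy` (e.g. `keras.layers.Dense(k, activation='sigmoid')` for k labels) instead of a softmax. A NumPy sketch of that idea on hypothetical logits and targets, thresholding each label independently:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical logits for 2 samples and 3 independent labels
logits = np.array([[2.0, -1.0, 0.5], [-3.0, 1.5, 0.2]])
targets = np.array([[1, 0, 1], [0, 1, 1]], dtype=np.float64)   # several labels may be 1 at once

probs = sigmoid(logits)                  # each output in (0, 1); rows need NOT sum to 1
predicted = (probs > 0.5).astype(int)    # threshold every label on its own

# Binary cross-entropy averaged over all samples and labels
eps = 1e-7
p = np.clip(probs, eps, 1 - eps)
loss = -np.mean(targets * np.log(p) + (1 - targets) * np.log(1 - p))
print(predicted, loss)
```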

Summary:
